Understanding LLM Hallucinations

Large language models (LLMs) have revolutionized the way we interact with technology. From answering questions to generating creative content, these models have a wide array of applications. However, a persistent challenge remains: LLM hallucinations. In this blog post, we will look at the problems associated with LLM hallucinations, walk through an example, and then reveal the best-kept secret to mitigating these issues. Let's discover how to avoid LLM hallucinations.

What Are LLM Hallucinations?

LLM hallucinations occur when a language model generates information that appears to be factual or contextually appropriate but is actually incorrect, misleading, or entirely fabricated. These hallucinations can range from minor inaccuracies to completely invented data that has no basis in reality.

The Problems with LLM Hallucinations

Impact on Trust and Reliability: One of the biggest concerns with LLM hallucinations is their impact on the trust and reliability of AI-generated content. When users encounter incorrect information, their confidence in the technology diminishes. This is especially problematic in critical fields like healthcare, law, and finance, where accurate information is paramount.

Spread of Misinformation: LLM hallucinations can contribute to the spread of misinformation. In the age of information, where news and data circulate rapidly, even a small inaccuracy can lead to significant misunderstandings and false beliefs. This ripple effect can have serious consequences on public opinion and decision-making.

Example of LLM Hallucination

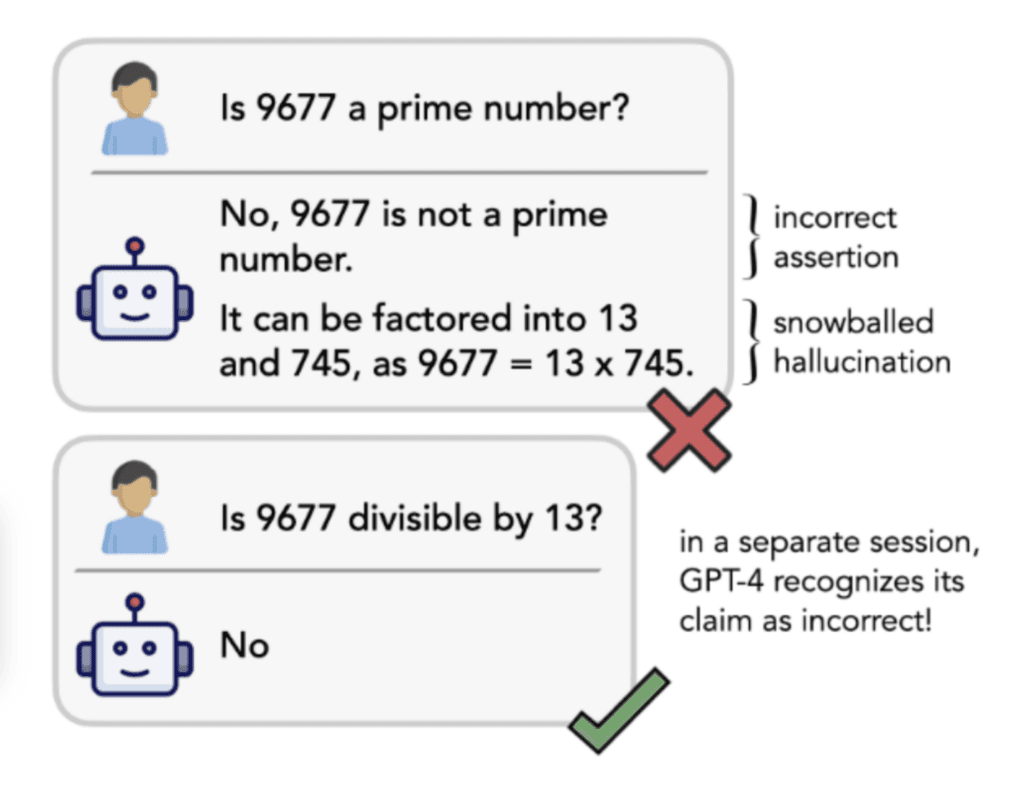

Consider a scenario where an LLM is used to provide medical advice. A user queries the model about a specific treatment for a common ailment. The model, based on its training data, generates a response suggesting a remedy that is not medically approved or, worse, potentially harmful. The user, trusting the AI’s response, follows the advice, leading to adverse health effects. This scenario underscores the critical importance of ensuring the accuracy and reliability of AI-generated content. Further examples can be found in the academic paper How Language Model Hallucinations Can Snowball.

The Best Kept Secret to Avoid LLM Hallucinations: Teneo

What is Teneo?

Teneo is an advanced conversational AI platform that offers LLM Orchestration. Unlike traditional platforms, Teneo combined with an LLM is designed to understand, reason, and interact with users more effectively, significantly reducing the risk of hallucinations.

How Teneo Mitigates LLM Hallucinations

1. Controlled Knowledge Base: With Teneo RAG, users can draw on their own controlled and curated knowledge base, which helps ensure that the information the model provides is accurate and reliable. This curated approach prevents the model from pulling in potentially incorrect or misleading data from unreliable sources.

2. Contextual Understanding: Teneo excels in understanding the context of interactions. This capability allows it to provide more relevant and accurate responses, as it can discern the nuances of user queries better than conventional LLMs.

3. Continuous Learning and Improvement: Teneo incorporates mechanisms for continuous learning and improvement, allowing it to adapt and refine its responses based on user interactions and feedback. This dynamic learning process helps in minimizing errors and enhancing the overall accuracy of the model.

4. Transparency and Explainability: Teneo provides transparency in its responses by explaining the reasoning behind its answers. This feature not only builds user trust but also allows for easier identification and correction of potential inaccuracies.

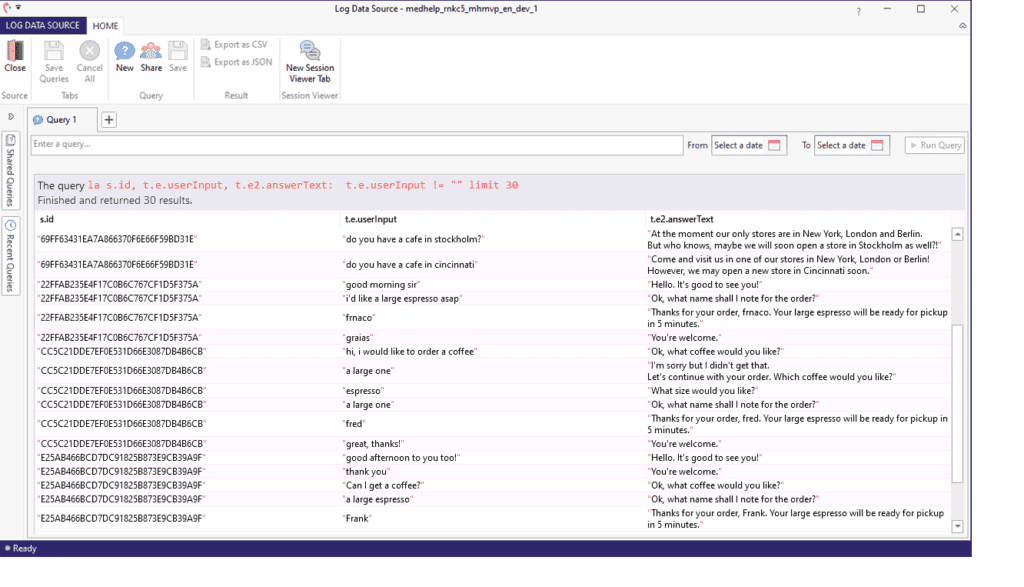

5. Data-Driven Decisions: Access to analytics empowers organizations to make data-driven decisions, reducing reliance on intuition or guesswork. This approach leads to more accurate, efficient, and effective decision-making processes, ultimately driving better business outcomes.
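To make the controlled knowledge base idea from point 1 more concrete, here is a minimal sketch of retrieval grounding in Python. It is not Teneo's API or implementation. The knowledge base entries, the stopword list, the word-overlap scoring, and the function names `retrieve` and `build_prompt` are all assumptions made for illustration. The point it shows is simple: answer only from curated passages, and decline when nothing relevant is found instead of letting the model guess.

```python
import re

# Hypothetical curated knowledge base. In practice this would be an
# organization's own vetted documents, not a hard-coded list.
KNOWLEDGE_BASE = [
    "Refunds are available within 30 days of purchase with a receipt.",
    "Support is open Monday to Friday from 9:00 to 17:00.",
    "Standard shipping takes 3 to 5 business days.",
]

# Small illustrative stopword list so common words do not count as matches.
STOPWORDS = {"a", "an", "the", "is", "are", "to", "of", "with", "from",
             "how", "what", "who", "do", "does", "i", "many"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question, min_overlap=2):
    """Return the best-matching curated passage, or None if no passage
    shares at least `min_overlap` tokens with the question."""
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(p)), p) for p in KNOWLEDGE_BASE]
    best_score, best_passage = max(scored, key=lambda item: item[0])
    return best_passage if best_score >= min_overlap else None


def build_prompt(question):
    """Build a grounded prompt for an LLM, or return None so the caller
    can decline to answer when the knowledge base has nothing relevant."""
    passage = retrieve(question)
    if passage is None:
        return None
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you do not know.\n"
        f"Context: {passage}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    for q in ["How many days does standard shipping take?",
              "Who won the 1998 world cup?"]:
        prompt = build_prompt(q)
        print(prompt if prompt else f"No curated source found for: {q}")
```

A production system would typically replace the word-overlap score with semantic search over embeddings, but the design choice stays the same: the model is only asked to answer when a curated passage supports the question.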

Why Choose Teneo?

- Reliability: With Teneo, you can be assured of receiving accurate and reliable information, reducing the risk of misinformation and its associated consequences.

- User Trust: By minimizing hallucinations, Teneo enhances user trust and confidence in AI-generated content.

- Versatility: Teneo is versatile and can be applied across various industries, including healthcare, finance, customer service, and more.

- Proven industry service: Discover how Teneo, with its 20+ years of experience, has successfully collaborated with various companies to scale and deliver outstanding results.

Explore more of Teneo!

LLM hallucinations pose a significant challenge to the reliability and trustworthiness of AI-generated content. However, with the right tools and approaches, such as the innovative Teneo platform, these issues can be effectively mitigated. By leveraging a controlled knowledge base, contextual understanding, continuous learning, and transparency, Teneo sets a new standard for conversational AI, ensuring that users receive accurate, reliable, and trustworthy information.

FAQ

What are LLM hallucinations?

LLM hallucinations occur when a language model generates information that appears factual or contextually appropriate but is actually incorrect, misleading, or entirely fabricated.

Why do LLM hallucinations happen?

LLM hallucinations happen because language models are trained on vast datasets from diverse sources, which include both reliable and unreliable information. Because a model generates text by predicting likely continuations rather than verifying facts, this broad exposure can lead it to produce plausible-sounding but inaccurate content.

What are the consequences of LLM hallucinations?

LLM hallucinations can undermine trust in AI technology, spread misinformation, and lead to serious consequences in critical fields such as healthcare, law, and finance where accurate information is crucial.

Can you provide an example of an LLM hallucination?

An example would be a language model suggesting an unapproved or harmful medical treatment based on its training data. If a user follows this incorrect advice, it could lead to adverse health effects.