As customer service changes rapidly, understanding and processing human language has become paramount. Large Language Models (LLMs) have emerged as powerful tools for this task. With so many LLMs on offer, such as GPT-4, LLaMa, and Bard, choosing one that keeps hallucinations to a minimum can seem overwhelming. The solution lies in the unrivaled capabilities of Teneo. In this article, we explain what LLM hallucinations are and why Teneo is the perfect remedy for them.

What are LLM Hallucinations?

First, it's crucial to understand what LLM hallucinations are. An LLM hallucination occurs when a Large Language Model (LLM) such as LLaMa, GPT-3, or GPT-4 generates a response that is fluent and grammatically correct but contains inaccurate or fabricated information, essentially inventing its own version of reality.

How often do LLMs hallucinate?

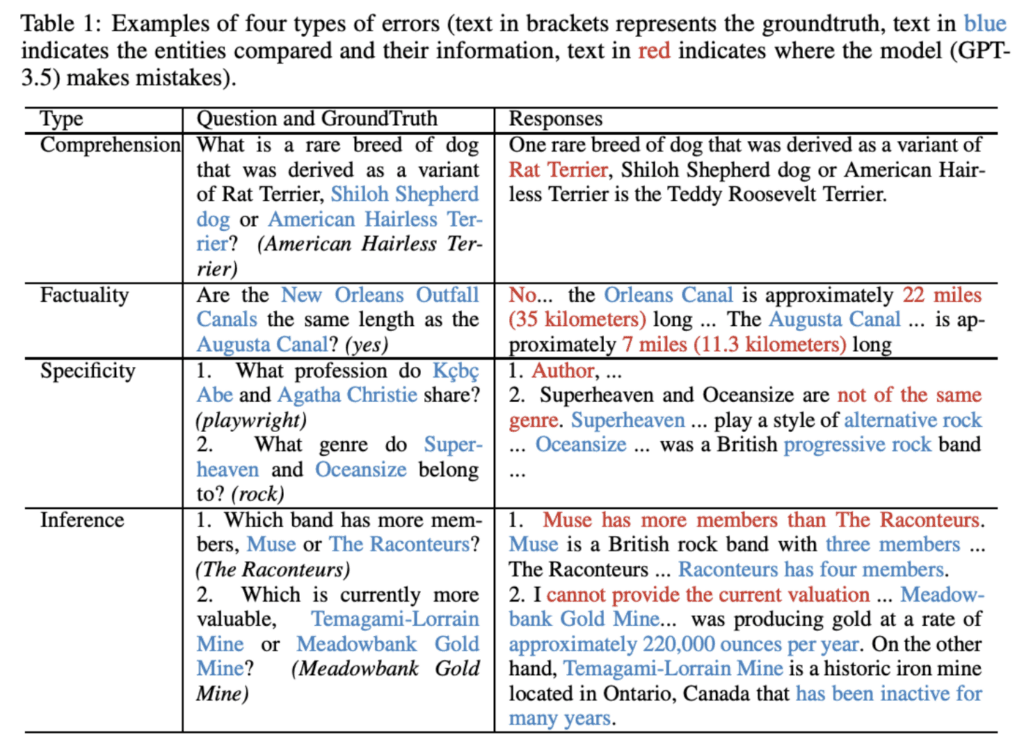

The University of Illinois conducted an in-depth study of Large Language Model (LLM) hallucinations. Here is one example of an error made by GPT-3.5:

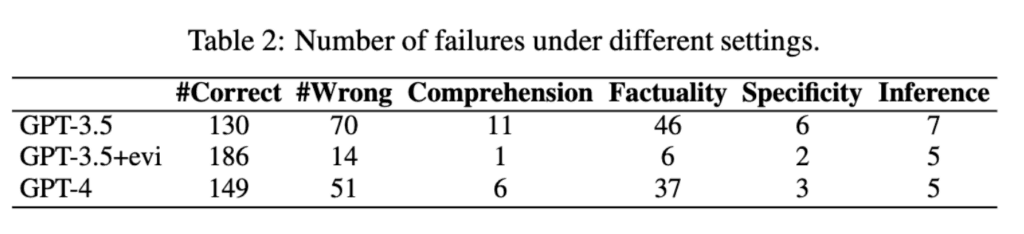

The study examined how often GPT models generate incorrect answers to the questions they are asked. Specifically, the researchers compared the newer GPT-4 model with its predecessor, GPT-3.5.

To do this, the researchers tested both models on 200 distinct inputs and compared the accuracy of their responses to determine which model performed better:

- Out of the 200 inputs, GPT-3.5 achieved a correctness score of 65%.

- Meanwhile, GPT-4 surpassed this with a correctness score of 74.5%.

Put differently, the share of incorrect answers fell from 35% for GPT-3.5 to 25.5% for GPT-4, a reduction of 9.5 percentage points. This suggests that newer iterations of the GPT models hallucinate less often, although neither model is free of hallucinations.
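To make the arithmetic behind these figures explicit, here is a short Python sketch that converts the two reported correctness scores into counts of correct and incorrect answers out of the 200 inputs. It assumes, as the article does, that every answer not scored as correct counts as a hallucination; the names `CORRECTNESS_PCT` and `error_stats` are illustrative only and do not come from the study.

```python
"""Worked arithmetic for the GPT-3.5 vs. GPT-4 correctness scores.

Assumption: every answer not scored as correct is counted as a hallucination.
"""

TOTAL_INPUTS = 200

# Correctness scores reported for each model, as percentages.
CORRECTNESS_PCT = {"GPT-3.5": 65.0, "GPT-4": 74.5}


def error_stats(correct_pct: float, total: int) -> tuple[int, int]:
    """Return (correct, incorrect) answer counts for a correctness percentage."""
    correct = round(correct_pct / 100 * total)
    return correct, total - correct


old_correct, old_wrong = error_stats(CORRECTNESS_PCT["GPT-3.5"], TOTAL_INPUTS)
new_correct, new_wrong = error_stats(CORRECTNESS_PCT["GPT-4"], TOTAL_INPUTS)

# Absolute change in the error rate, in percentage points.
pp_drop = (old_wrong - new_wrong) / TOTAL_INPUTS * 100
# Relative change: the share of GPT-3.5's errors that GPT-4 avoided.
relative_drop = (old_wrong - new_wrong) / old_wrong * 100

print(f"GPT-3.5: {old_correct} correct, {old_wrong} incorrect")
print(f"GPT-4:   {new_correct} correct, {new_wrong} incorrect")
print(f"Error rate drop: {pp_drop:.1f} percentage points "
      f"({relative_drop:.1f}% fewer errors)")
```

Running it shows 130 versus 149 correct answers (70 versus 51 incorrect). The 9.5 percentage-point drop therefore corresponds to roughly 27% fewer incorrect answers relative to GPT-3.5, which is why the relative figure can look larger than the absolute one.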


Why do LLMs hallucinate?

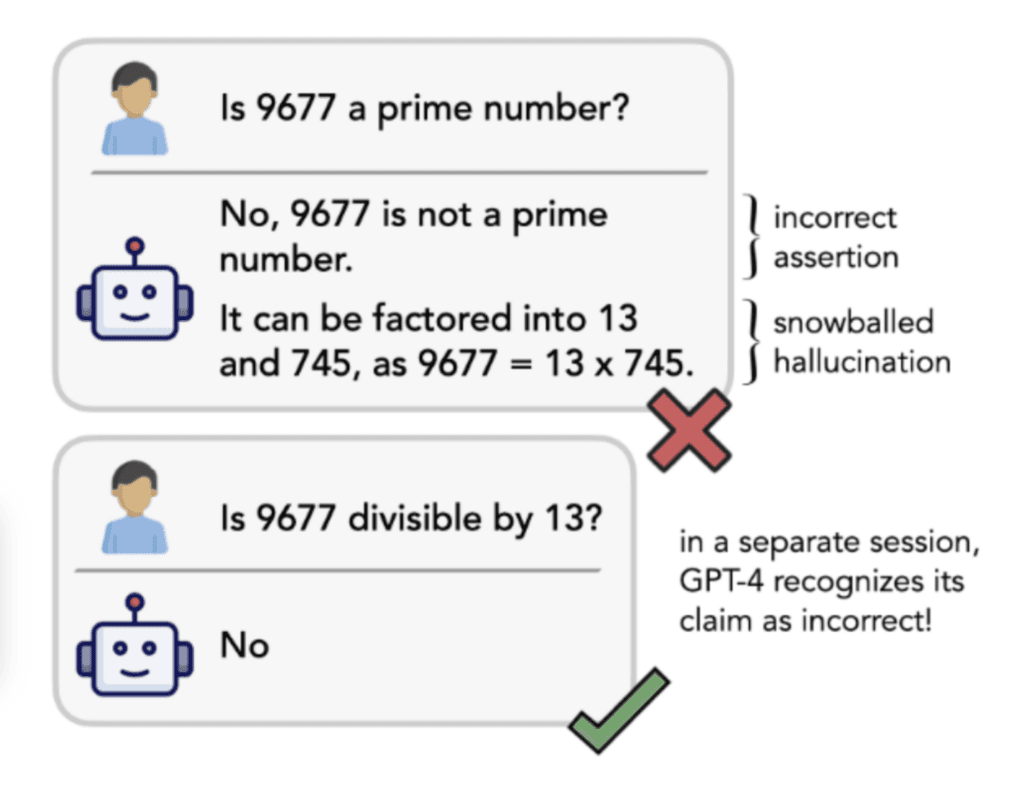

While the cause of LLM hallucinations is not fully understood, researchers have found that LLMs such as GPT-4 can recognize 87% of their own mistakes when asked about them separately. This suggests that some hallucinations are caused not by missing knowledge but by snowballing, as illustrated in the image above.

Snowballing here refers to a situation that rapidly gets out of control, like a snowball growing larger as it rolls downhill. Once a hallucinating LLM commits to an incorrect answer, it continues down that path, insists that the information it gave is true, and keeps failing to provide truthful answers.

How Teneo can help you when dealing with LLM hallucinations

Teneo is a powerful AI orchestration platform with several features that help reduce LLM hallucinations. Its content filtering helps ensure that output is accurate and reliable. Prompt adaptation with Adaptive Answers enables personalized AI responses and better management of conversational flow. The Teneo Linguistic Modeling Language (TLML) lets you fine-tune LLM prompts for a customized user experience, improving the accuracy of responses.

Teneo also serves as a security layer, giving you complete control over the information sent to the LLM and preventing unwanted inquiries from reaching it. In addition, Teneo can reduce operational costs by up to 98%. In short, Teneo is a comprehensive solution for controlling LLM hallucinations, reducing costs, and unlocking the full potential of LLMs across a wide range of applications.