Achieving an optimal balance between efficiency, accuracy, and cost-effectiveness is critical in the fast-evolving landscape of conversational AI. The emergence of large language models (LLMs) such as GPT-4o has introduced new opportunities to transform enterprise virtual agent development. At Teneo, we have harnessed this potential to create a sophisticated hybrid solution that combines the generative capabilities of LLMs with our advanced intent engine. This integration delivers accuracy, flexibility, and cost-effectiveness for enterprise virtual agents, setting a new standard in the industry. Let’s explore the hybrid LLM chat experience with Teneo.

Introducing Teneo’s Hybrid NLU: A New Era in Chatbot Development

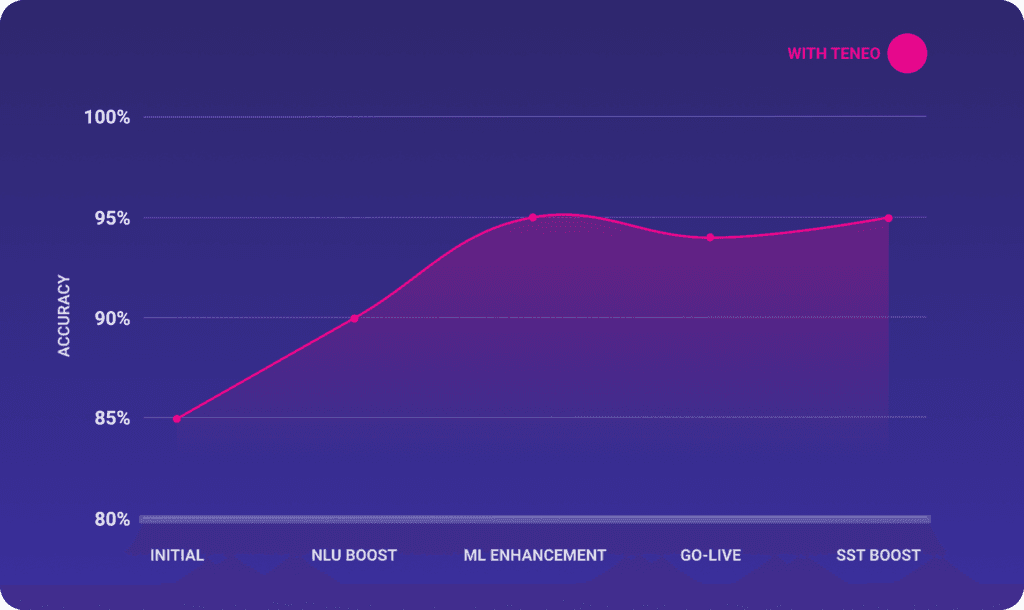

Teneo’s Hybrid NLU (Natural Language Understanding) represents a significant leap forward in chatbot technology. By integrating the generative capabilities of LLMs with Teneo’s state-of-the-art intent engine, we’ve created a system that maximizes the strengths of both technologies. The approach begins with our intent engine attempting to identify the user’s intent. If the engine cannot find a suitable match, the LLM steps in. The result is high accuracy (our intent engine reaches 99% in our tests), room for customization, and costs that stay in check.
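To make the intent-first flow concrete, here is a minimal Python sketch of the routing idea. It is an illustration under stated assumptions, not Teneo’s actual implementation: the names `IntentMatch`, `route`, `toy_classifier`, and `toy_llm`, as well as the 0.8 confidence threshold, are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class IntentMatch:
    intent: str
    confidence: float


def route(
    utterance: str,
    classify_intent: Callable[[str], Optional[IntentMatch]],
    call_llm: Callable[[str], str],
    threshold: float = 0.8,  # hypothetical cut-off, tune per project
) -> Tuple[str, str]:
    """Try the intent engine first; fall back to the LLM only if needed."""
    match = classify_intent(utterance)
    if match is not None and match.confidence >= threshold:
        return ("intent", match.intent)
    # No confident intent match: hand the query to the LLM.
    return ("llm", call_llm(utterance))


# Toy stand-ins for illustration only.
def toy_classifier(utterance: str) -> Optional[IntentMatch]:
    if "refund" in utterance.lower():
        return IntentMatch("refund_request", 0.95)
    return None


def toy_llm(utterance: str) -> str:
    return f"[LLM answer for: {utterance}]"


if __name__ == "__main__":
    print(route("I want to refund my ticket", toy_classifier, toy_llm))
    print(route("What is the weather in Oslo?", toy_classifier, toy_llm))
```

In this sketch the LLM is only called when the classifier returns nothing or is not confident enough, which is what keeps the number of LLM requests down.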

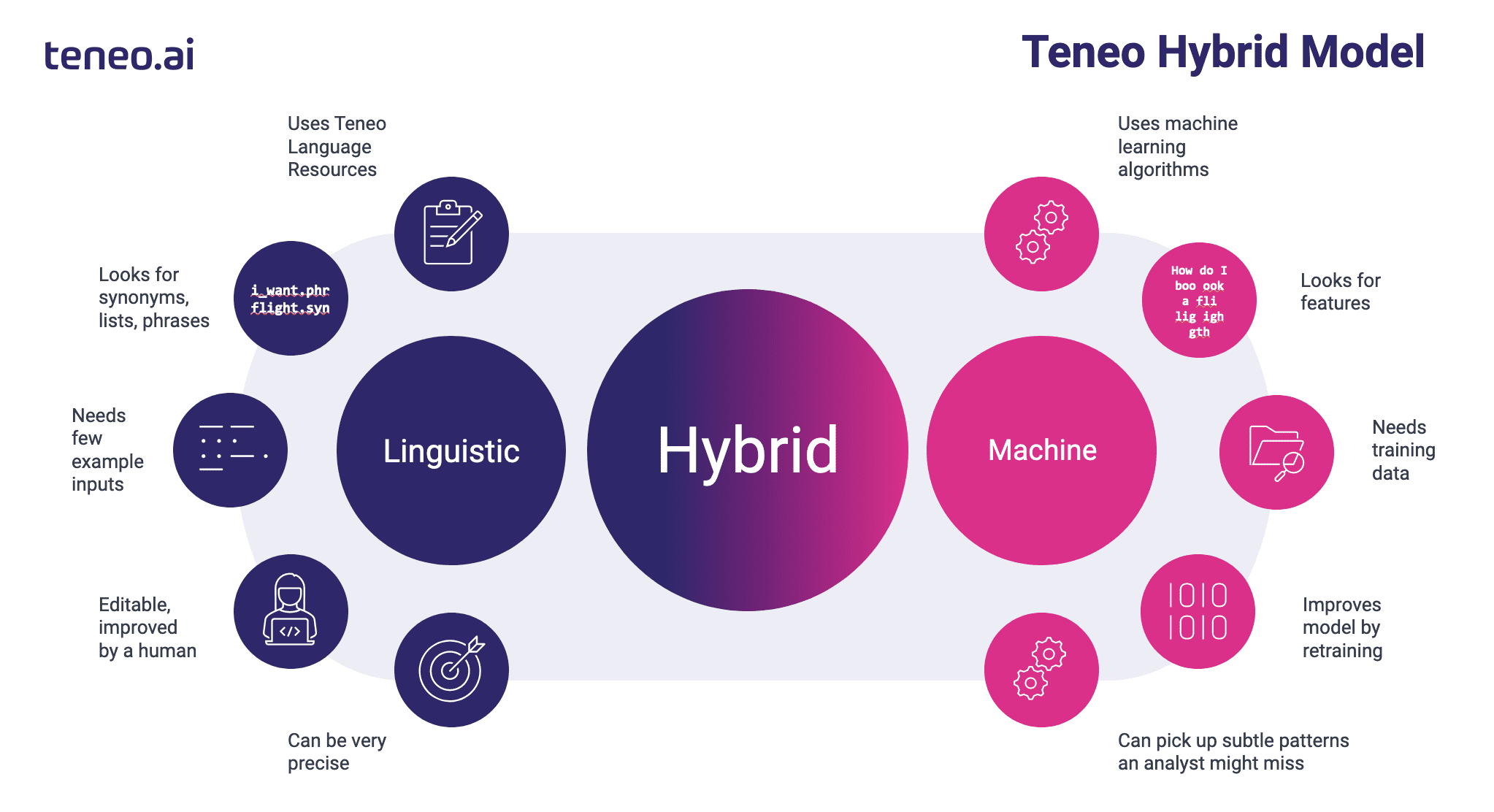

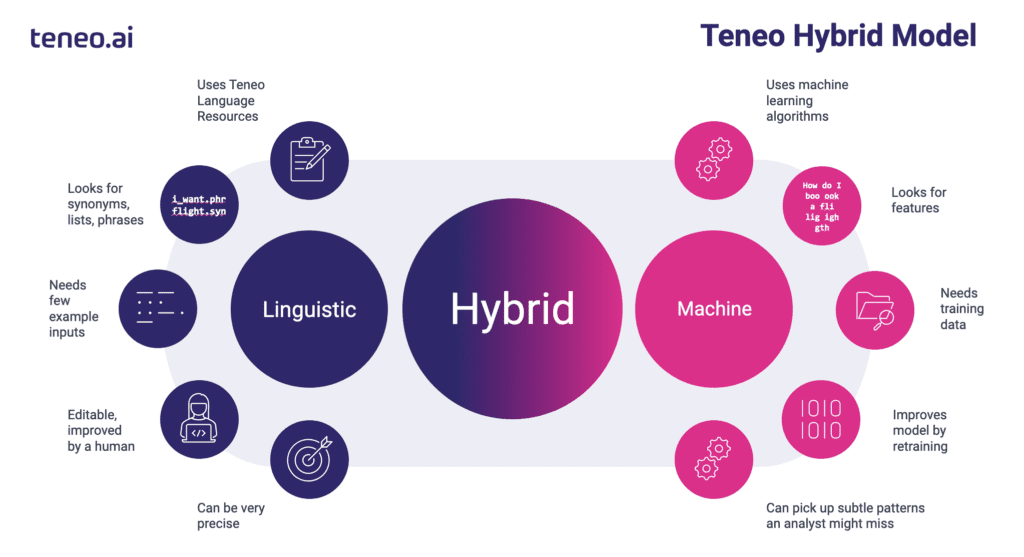

LLMs vs. Teneo’s Intent Models: A Balanced Approach

LLMs bring several advantages, such as generating responses without needing extensive training data, leading to quicker development cycles. However, they can be slower, costlier, prone to hallucination, and sometimes less accurate than specialized intent models. Fine-tuning LLMs can also pose challenges when they don’t function as expected. See 5 biggest challenges with LLMs and how to solve them for more info. Despite these hurdles, LLMs excel in handling queries outside the trained scope of a virtual agent.

Teneo’s intent models, on the other hand, are highly scalable, accurate, and easily fine-tuned. They do require training data, which can slow down the development of larger models and limit their general understanding compared to LLMs trained on extensive datasets.

The Synergy of Hybrid NLU: Flexibility and Efficiency

One of the standout benefits of Teneo’s Hybrid NLU is the flexibility it offers to both new and existing customers. This system allows seamless integration without the need to choose between technologies, mitigating the risk of an incomplete solution. By utilizing both technologies in tandem, customers can leverage the unique strengths of each system to meet their specific needs. One example can be seen in the picture below where Teneo is integrated with Amazon Bedrock.

For instance, Teneo’s Hybrid NLU enables enterprises to capitalize on the rapid development and broad understanding provided by LLMs while also benefiting from the fine-tuning capabilities of our platform. This approach optimizes high-traffic areas, such as frequently asked questions, by employing the intent model. The result is faster response times and reduced costs due to fewer LLM requests.
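The cost effect can be estimated with simple arithmetic. The sketch below is a rough model, assuming the intent engine runs on every query and the LLM runs only on the share it cannot handle. The function name and all prices are hypothetical placeholders, not Teneo or vendor figures.

```python
def blended_cost_per_query(intent_share: float,
                           intent_cost: float,
                           llm_cost: float) -> float:
    """Average cost per query when the LLM only handles the fallback share.

    intent_share: fraction of queries resolved by the intent model (0..1)
    intent_cost:  cost of one intent-engine call (paid on every query)
    llm_cost:     cost of one LLM call (paid only on fallback)
    """
    if not 0.0 <= intent_share <= 1.0:
        raise ValueError("intent_share must be between 0 and 1")
    return intent_cost + (1.0 - intent_share) * llm_cost


if __name__ == "__main__":
    # Hypothetical prices, for illustration only.
    llm_only = blended_cost_per_query(0.0, 0.0001, 0.01)
    hybrid = blended_cost_per_query(0.8, 0.0001, 0.01)
    print(f"LLM on every query: {llm_only:.4f} per query")
    print(f"Hybrid (80% intents): {hybrid:.4f} per query")
```

The more traffic the intent model absorbs, the closer the average cost gets to the cost of an intent-engine call alone.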

Research and Experimentation: Pushing the Boundaries

Our extensive research and experimentation with challenging datasets have yielded impressive results. As published in a recent whitepaper, Teneo’s intent engine correctly identifies intents 99% of the time, while LLMs achieve around 79% accuracy. By combining these technologies, we surpass the performance of either model alone, reaching even higher accuracy levels.

Additionally, we explored the use of embeddings, which simplify machine learning tasks by converting large inputs into compact numerical vectors. Although their standalone performance did not meet our high standards, embeddings proved effective at narrowing down a large list of intents to a smaller, more manageable set. Passing only that shortlist to the LLM can improve the overall cost efficiency of the LLM component.
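The shortlisting idea can be sketched in a few lines of Python. This is a simplified illustration, assuming cosine similarity as the ranking measure. The three-dimensional vectors and intent names are toy values invented for the example; real embeddings come from an embedding model and have far more dimensions.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def shortlist_intents(query_vec: List[float],
                      intent_vecs: Dict[str, List[float]],
                      k: int = 3) -> List[str]:
    """Return the k intent names whose vectors are closest to the query."""
    ranked = sorted(
        intent_vecs,
        key=lambda name: cosine(query_vec, intent_vecs[name]),
        reverse=True,
    )
    return ranked[:k]


# Toy vectors for illustration only.
INTENT_VECS = {
    "refund_request": [0.9, 0.1, 0.0],
    "ticket_cancellation": [0.7, 0.3, 0.1],
    "reschedule_flight": [0.1, 0.9, 0.2],
    "baggage_allowance": [0.0, 0.2, 0.9],
}

if __name__ == "__main__":
    query = [0.8, 0.2, 0.0]  # pretend embedding of "I want to refund my ticket"
    print(shortlist_intents(query, INTENT_VECS, k=2))
```

Only the shortlisted intent names would then be included in the LLM prompt, which shortens the prompt compared with listing every intent.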

Minimizing False Intents by Integrating Search

A key strength of Teneo’s Hybrid NLU is its ability to minimize false positives, enhancing the overall user experience. For example, if a user says, “I want to refund my ticket,” our intent engine guides the model to the correct response by linking the request to a ‘refund request’ intent rather than a ‘ticket cancellation’ intent. Conversely, if a user states, “I need to reschedule my flight,” an LLM-powered bot with broader understanding can better connect the query to the correct intent. For phrases like these, Teneo can serve as an LLM orchestration layer, and it includes its own library covering synonyms and alternative ways of expressing a specific phrase.

Furthermore, Teneo offers an additional layer to our hybrid solution that doesn’t rely on intents. By analyzing a website, knowledge base, or other sources with Teneo, we combine search technology and LLMs to locate the correct information. This zero-shot method excels in rapidly addressing a broad spectrum of information, making it ideal for managing unstructured queries.
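A bare-bones version of this search-plus-LLM pattern might look like the sketch below. It is an assumption-laden illustration, not Teneo’s search layer: retrieval is reduced to simple keyword overlap, the function names are hypothetical, the passages are invented sample text, and the LLM is passed in as a plain callable.

```python
import re
from typing import Callable, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: List[str], top_k: int = 2) -> List[str]:
    """Rank passages by how many words they share with the question."""
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(p)), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:top_k]]


def build_prompt(question: str, context: List[str]) -> str:
    """Ask the LLM to answer from the retrieved passages only."""
    joined = "\n".join(f"- {p}" for p in context)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say so.\n"
        f"Context:\n{joined}\n"
        f"Question: {question}"
    )


def answer(question: str, passages: List[str],
           call_llm: Callable[[str], str]) -> str:
    context = retrieve(question, passages)
    if not context:
        return "Sorry, I could not find that information."
    return call_llm(build_prompt(question, context))


# Invented sample passages, for illustration only.
PASSAGES = [
    "Checked baggage allowance is 23 kg per passenger.",
    "Refunds are processed within 7 days.",
    "Our lounge opens at 6 am.",
]

if __name__ == "__main__":
    echo_llm = lambda prompt: prompt  # stand-in that just returns the prompt
    print(answer("What is the baggage allowance?", PASSAGES, echo_llm))
```

No intents are defined anywhere in this flow: the retrieved passages supply the knowledge, and the LLM phrases the answer.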

Empowering the Future of Conversational AI

Teneo’s Hybrid NLU combines the best of both worlds: the rapid development and broad understanding of LLMs with the precise, fine-tuned accuracy of our intent models. This synergy ensures that virtual agents built on our platform can be seamlessly upgraded with generative AI, enhancing accuracy and filling knowledge gaps.

By integrating LLMs with our advanced intent engine, Teneo provides a robust, flexible, and cost-effective solution for enterprise virtual agents, ensuring they perform optimally and meet the unique needs of our customers. Embrace the future of conversational AI with Teneo’s Hybrid NLU, and experience the next level of virtual agent performance and efficiency.