What is a Large Language Model?

Large Language Models (LLMs) are advanced deep learning models designed for a variety of Natural Language Processing (NLP) tasks. They leverage the transformer architecture to understand and generate text. Trained on extensive datasets, they can recognize, translate, predict, and generate text and other content. As neural networks, LLMs process input through layers of interconnected nodes, a design loosely inspired by the human brain. They are integral to AI applications such as chatbots and voice assistants.

Large Language Models vs Generative AI

While generative AI encompasses AI models creating various content types, LLMs, a subset of generative AI, specialize in text-based generation and interpretation.

How Large Language Models Work

LLMs operate in two main stages:

- Training: Learning general language patterns from large text datasets through unsupervised (more precisely, self-supervised) learning.

- Fine-Tuning: Optimizing performance for specific tasks like translation.
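As a rough illustration of the training stage, the sketch below shows how raw text can supply its own training targets: each position in a token sequence becomes a (context, next token) example, so no manual labels are needed. The function name `next_token_pairs` and the whitespace splitting are assumptions made for this example, not how any particular LLM is implemented.

```python
def next_token_pairs(tokens, context_size):
    """Build (context, target) pairs for next-token prediction.

    Hypothetical helper for illustration: the target at each position
    is simply the token that follows the context in the raw text.
    """
    pairs = []
    for i in range(1, len(tokens)):
        # Keep at most `context_size` tokens immediately before position i.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


# Toy "corpus": real systems use subword tokens and far more text.
tokens = "the cat sat on the mat".split()
pairs = next_token_pairs(tokens, context_size=3)

for context, target in pairs:
    print(context, "->", target)
```

Fine-tuning reuses the same idea on a smaller, task-specific dataset, for example pairs of source sentences and their translations.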

Key Components of Large Language Models

LLMs are composed of:

- Embedding Layer: Transforms input text into meaningful embeddings.

- Feedforward Layer (FFN): Processes input embeddings for higher-level understanding.

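- Recurrent Layer: Analyzes word sequences in order to capture relationships within a sentence. Transformer-based LLMs generally replace recurrence with attention and positional encodings.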

- Attention Mechanism: Focuses on relevant parts of the input for accurate output generation.
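To make the attention mechanism more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It assumes the queries, keys, and values are already available as small lists of numbers. Real models derive them from the embeddings with learned projection matrices and run the computation on accelerated tensor libraries, both of which are omitted here.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Three toy 2-dimensional "embeddings" attending to each other.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = attention(x, x, x)
```

Each output row is a blend of all value vectors, weighted by how strongly the corresponding query matches each key. This is what "focusing on relevant parts of the input" means in practice.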

Large Language Models Use Cases

LLMs are versatile in:

- Information Retrieval: Powering search engines for responsive queries.

- Sentiment Analysis: Analyzing textual data for sentiment insights.

- Text and Code Generation: Assisting in creative writing and software development.

- Conversational AI: Enhancing customer engagement through chatbots and voicebots.

Choosing the right LLM can be challenging. Here is an overview of the most popular LLMs and their strengths.

Examples of Popular Large Language Models

The LLM landscape is constantly expanding, with new models released almost every week. Notable LLMs currently include Google’s Gemini, xAI’s Grok, Meta’s Llama, Anthropic’s Claude, and OpenAI’s GPT series, each with unique capabilities and functionalities. See here for a comparison between these models.

Benefits and Challenges of Large Language Models

LLMs offer wide application potential and continuous improvement, learning rapidly through few-shot and zero-shot learning.
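The difference between zero-shot and few-shot use can be sketched as a difference in how the prompt is built: zero-shot gives only the task description, while few-shot also includes a handful of worked examples. The helper `build_prompt` and the "Input:/Output:" layout below are assumptions for illustration, not a format required by any specific LLM.

```python
def build_prompt(task, query, examples=()):
    """Assemble a prompt; with no examples this is a zero-shot prompt."""
    parts = [task]
    for text, label in examples:
        # Each worked example shows the model the expected pattern.
        parts.append(f"Input: {text}\nOutput: {label}")
    # The final block is left open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


task = "Classify the sentiment as positive or negative."
query = "The battery died after a day."

zero_shot = build_prompt(task, query)
few_shot = build_prompt(
    task,
    query,
    examples=[
        ("I love this phone.", "positive"),
        ("The screen cracked immediately.", "negative"),
    ],
)
print(few_shot)
```

In both cases the model's weights stay unchanged. The examples only steer the output through the prompt itself.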

However, LLMs face challenges like hallucinations, security risks, bias, costs and issues related to consent and copyright. Machine learning (ML) and large language model (LLM) workloads are also very expensive to run in the cloud as they require significant amounts of computing power, memory, and storage. But there are ways to reduce your cloud costs for ML/LLM workloads without sacrificing scalability or reliability, which we have expanded on here.

Teneo’s platform offers robust solutions to these challenges, equipping businesses with the tools for a more secure, unbiased, and cost-efficient application of LLMs.

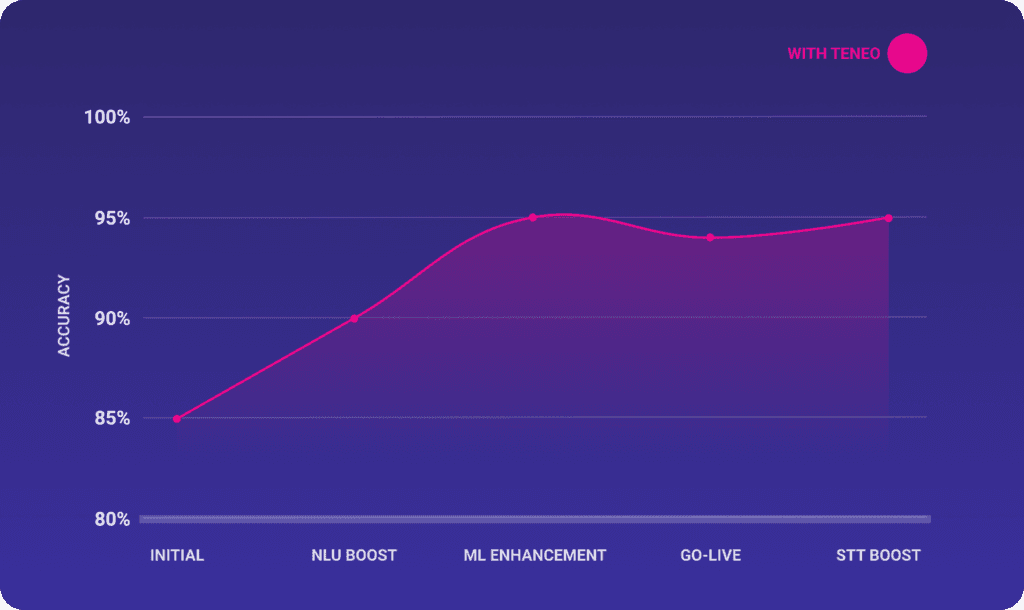

Seeking LLM precision without the price tag? Learn how Teneo’s NLU Accuracy Booster can elevate your AI’s efficiency while cutting costs.

Transformer Model Explained

At the heart of LLMs is the transformer model, which in its original form consists of an encoder and a decoder (many modern LLMs use only the decoder). It tokenizes input data and applies mathematical operations to discover relationships between tokens, enabling pattern recognition that resembles human language understanding. Because self-attention processes all tokens in a sequence in parallel, transformers can be trained faster than traditional sequential models such as recurrent networks.
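The tokenization step can be sketched as mapping text to integer IDs from a fixed vocabulary. The word-level scheme and the names `build_vocab` and `encode` below are simplifying assumptions. Production LLMs typically use subword tokenizers, which split rare words into smaller pieces instead of falling back to an unknown token.

```python
def build_vocab(corpus):
    """Assign an integer ID to every distinct lowercase word in the corpus."""
    vocab = {"<unk>": 0}  # ID 0 is reserved for words never seen before
    for text in corpus:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(text, vocab):
    """Convert text to token IDs, using <unk> for out-of-vocabulary words."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]


vocab = build_vocab(["the cat sat", "the dog ran"])
ids = encode("the bird sat", vocab)
print(ids)  # [1, 0, 3]: "bird" is not in the vocabulary
```

These IDs are what the embedding layer receives. The model itself never sees raw characters.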

Applying Transformers to Search Applications

LLMs enhance search applications through:

- Generic Models: For predicting next words in information retrieval.

- Instruction-Tuned Models: Aiding in tasks like sentiment analysis.

- Dialog-Tuned Models: Facilitating conversational AI and chatbots.

Explore how Generative AI Orchestration brings these capabilities to life in search and customer service, providing real-time, tailored customer interactions that drive customer satisfaction and efficiency.

FrugalGPT: Cost-Effective Large Language Model Usage

FrugalGPT is an approach for using LLMs more cost-effectively and efficiently. It focuses on strategies like prompt adaptation, LLM approximation, and LLM cascade to reduce costs while maintaining or improving accuracy. FrugalGPT has been shown to save up to 98% of costs while matching or improving performance compared to individual LLM APIs.

Strategies in FrugalGPT

- Prompt Adaptation: Reducing prompt size to decrease costs.

- LLM Approximation: Using affordable models to mimic expensive LLMs.

- LLM Cascade: Sequentially using different LLM APIs based on cost and accuracy needs.
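The LLM cascade idea can be sketched as follows: try the cheapest model first and escalate to a more expensive one only when the answer does not look reliable enough. Everything concrete here is an assumption for illustration: the `Model` class, the per-call costs, the stand-in answer functions, and the confidence scores. This is not FrugalGPT's actual implementation, which relies on a learned scorer to judge answer reliability.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Model:
    name: str
    cost: float  # hypothetical cost per call
    answer: Callable[[str], Tuple[str, float]]  # returns (answer, confidence)


def cascade(query: str, models: List[Model], threshold: float):
    """Query models from cheapest to most expensive until one is confident."""
    total_cost = 0.0
    result, used = "", ""
    for model in sorted(models, key=lambda m: m.cost):
        result, score = model.answer(query)
        total_cost += model.cost
        used = model.name
        if score >= threshold:
            break  # good enough: skip the more expensive models
    return result, used, total_cost


# Stand-in models: the cheap one is only confident on short queries.
def cheap(query):
    return "cheap answer", 0.9 if len(query.split()) <= 5 else 0.3


def expensive(query):
    return "expensive answer", 0.95


models = [Model("large", 1.0, expensive), Model("small", 0.01, cheap)]

easy = cascade("What is 2 + 2?", models, threshold=0.8)
hard = cascade(
    "Summarize the main legal risks in this forty page contract",
    models,
    threshold=0.8,
)
print(easy)
print(hard)
```

In this sketch the easy query is answered by the small model alone, while the hard query pays for both calls. Savings therefore depend on how many queries the cheaper models can handle reliably. If no model reaches the threshold, the answer from the most expensive model is returned.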

FrugalGPT’s testing demonstrated significant cost savings and flexibility in performance-cost trade-offs, making it a valuable tool for large-scale LLM applications. Explore three ways to reduce LLM costs here.

Introduction to Teneo’s AI Orchestration of Large Language Models

Teneo’s advanced Generative AI Orchestration streamlines your contact center’s technology, harmonizing Generative AI and data systems for peak efficiency and smoother customer journeys.

Teneo is compatible with every LLM and helps you:

- Save 98% in Generative AI Operation Expenses (OpEx).

- Elevate service precision to an astounding 99%.

- Prevent Prompt Hacking/Injections.

- Effectively analyze and monitor your LLM.

Future Advancements in Large Language Models

LLMs are poised to bring transformative changes to the job market, and their use in society raises ethical considerations. For example, LLMs could be used to automate tasks like customer service, content creation, and even legal research. This could free up time for human workers to focus on higher-level tasks and improve overall productivity.

However, the use of LLMs also raises ethical considerations. For instance, there are concerns that LLMs can propagate bias and spread misinformation through hallucinations. Additionally, the training of LLMs is being questioned because it often uses copyrighted and trademarked material without transparency, which can lead to unfair use of content and harm to content owners.

Contact us for a demo on Gen AI orchestration!