Generative AI is a groundbreaking technology that is driving innovation across industries. However, integrating advanced models such as GPT often comes with significant operational expenses (OpEx), which poses a substantial challenge for many businesses.

The cost of training your own LLM

Training a custom model based on GPT-4 takes several months, and the associated cost starts at USD 2 to 3 million.

Expensive to run LLMs like GPT-4

The expenses associated with running large language models (LLMs) like GPT-4 are substantial, primarily due to the computational resources required. This high cost of operation limits the scalability and accessibility of AI technologies, especially for smaller enterprises or those with limited budgets. Using GPT-4 for customer service can cost a small business more than $252,000 a year.

The Complexity of Generative AI Integration

Another challenge lies in the complexity of integrating these advanced AI models into existing systems. Companies like Google have developed sophisticated AI solutions, but integrating these into a business’s unique ecosystem often requires extensive customization, further escalating costs and technical demands.

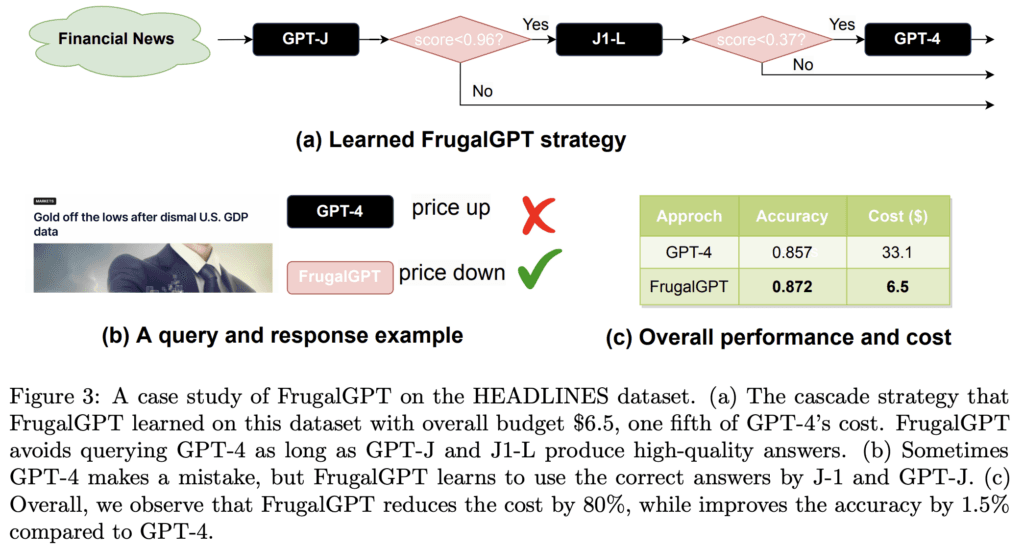

The research paper titled “FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance” proposes a solution to the cost problem with LLMs. The paper introduces FrugalGPT, a methodology that learns which combinations of LLMs to use for different queries in order to reduce cost and improve accuracy.

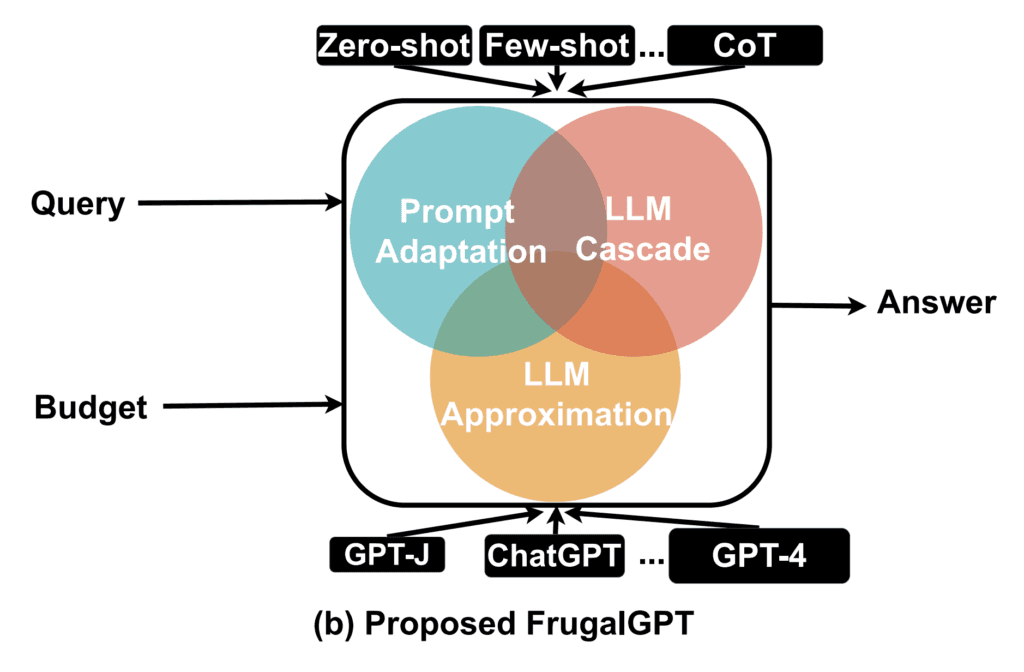

Proposed FrugalGPT Architecture

The paper shows that the FrugalGPT methodology can match the performance of the best individual LLM with up to 98% cost reduction, or improve accuracy over the best individual LLM by 4% at the same cost.

FrugalGPT – Reducing Costs and Improving Performance

The FrugalGPT paper proposes a clear strategy for reducing the cost of using LLMs. For non-technical users, its three strategies can be described as follows:

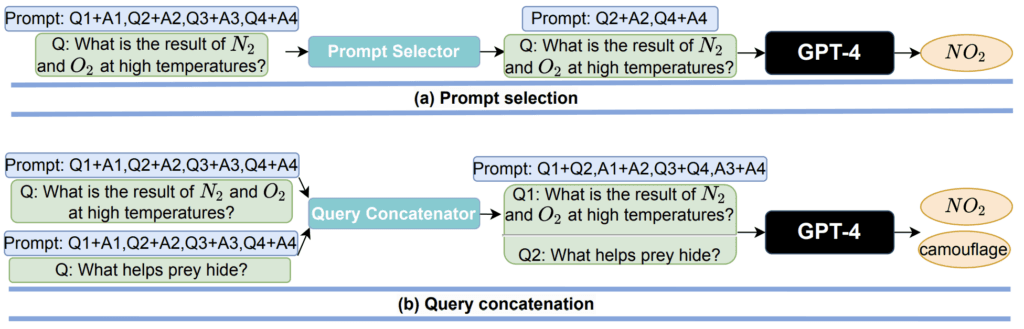

1. Prompt Adaptation:

This strategy is about identifying and using effective, often shorter, prompts to save cost. In practice, it means crafting your queries to an LLM to be as concise as possible, for example by reducing a question to its most essential parts. Because LLM APIs typically charge by the number of tokens processed, a shorter prompt uses fewer tokens and therefore costs less per API call.

Two Prompt Adaptation techniques:

Prompt Selection: uses only a subset of the in-context examples in the prompt, which reduces the prompt's size.

Query Concatenation: aggregates multiple queries so that they share a single prompt.
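The two techniques above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the helper names (`count_tokens`, `select_examples`, `build_prompt`) are hypothetical, token counts are approximated by whitespace-separated words rather than a real tokenizer, and example relevance is approximated by simple word overlap.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (assumption, not a real tokenizer)."""
    return len(text.split())


def select_examples(examples: List[str], query: str, k: int) -> List[str]:
    """Prompt selection: keep only the k examples that share the most words with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(
        examples,
        key=lambda ex: len(query_words & set(ex.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(examples: List[str], queries: List[str]) -> str:
    """Query concatenation: several queries share one copy of the in-context examples."""
    numbered = [f"Q{i + 1}: {q}" for i, q in enumerate(queries)]
    return "\n".join(examples + numbered)
```

Sending two queries in one concatenated prompt includes the examples once instead of twice, so the combined prompt is shorter than two separate prompts. Selecting fewer examples shrinks every prompt further.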

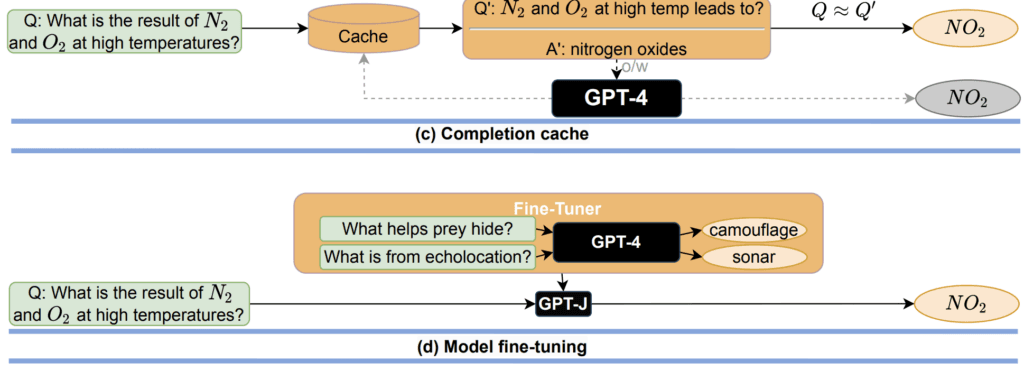

2. LLM Approximation:

This tactic aims to create cheaper and simpler LLMs that match the performance of a more powerful (and more expensive) model on specific tasks. In other words, you use a less expensive model that has been shown to perform well on the type of task you are interested in. With LLM Approximation, you are not paying for extra capabilities that you don't need.

Two LLM Approximation techniques:

Completion Cache: stores an LLM API's response and reuses it when a similar query is asked again.

Model Fine-Tuning: uses the responses of the more expensive LLMs to fine-tune your cheaper LLMs.
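A completion cache can be sketched as follows. This is a simplified illustration under stated assumptions: the `CompletionCache` class and its `call_llm` parameter are hypothetical names, and "similar" queries are detected only by normalizing case, punctuation, and whitespace. A production system would more likely use a semantic similarity measure.

```python
import string
from typing import Callable, Dict


class CompletionCache:
    """Reuse a stored response when a query normalizes to one already seen."""

    def __init__(self, call_llm: Callable[[str], str]) -> None:
        self._call_llm = call_llm          # hypothetical function that calls the paid LLM API
        self._store: Dict[str, str] = {}
        self.api_calls = 0                 # number of times the paid API was actually called

    @staticmethod
    def _normalize(query: str) -> str:
        # Lowercase, drop ASCII punctuation, and collapse runs of whitespace.
        cleaned = query.lower().translate(str.maketrans("", "", string.punctuation))
        return " ".join(cleaned.split())

    def complete(self, query: str) -> str:
        key = self._normalize(query)
        if key not in self._store:
            self.api_calls += 1
            self._store[key] = self._call_llm(query)
        return self._store[key]
```

Every cache hit is a query answered at no API cost, which is why this technique pays off most for workloads with many repeated questions, such as customer service.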

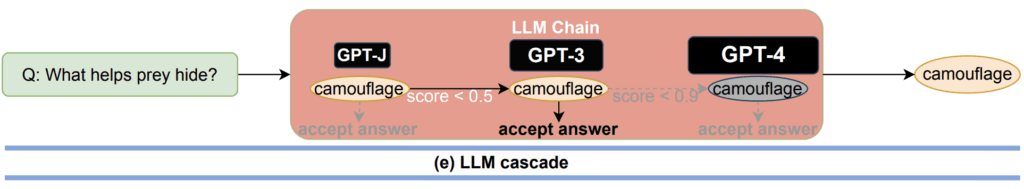

3. LLM Cascade:

This strategy adaptively chooses which LLM to use for each query. It means using a less expensive model for simpler queries and reserving the more expensive models for more complex ones. That way, you only pay for the more expensive LLMs when you really need them.
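The cascade idea can be sketched as below. This is a minimal illustration, not the paper's implementation: the `Model` type and `cascade` function are hypothetical, costs are in arbitrary units, and each model is assumed to report its own confidence score. In the paper, a separately trained scoring function judges whether an answer is reliable.

```python
from typing import Callable, List, NamedTuple, Tuple


class Model(NamedTuple):
    name: str
    cost_per_call: float                         # arbitrary cost units (assumption)
    answer: Callable[[str], Tuple[str, float]]   # returns (text, confidence in [0, 1])


def cascade(query: str, models: List[Model], threshold: float) -> Tuple[str, str, float]:
    """Try models from cheapest to most expensive; stop at the first confident answer.

    Returns (model name, answer text, total cost of all calls made).
    If no model is confident enough, the most expensive model's answer is returned.
    """
    if not models:
        raise ValueError("at least one model is required")
    ordered = sorted(models, key=lambda m: m.cost_per_call)
    total_cost = 0.0
    for index, model in enumerate(ordered):
        text, confidence = model.answer(query)
        total_cost += model.cost_per_call
        is_last = index == len(ordered) - 1
        if confidence >= threshold or is_last:
            return model.name, text, total_cost
    raise AssertionError("unreachable: the last model always returns")
```

Note the trade-off: a query that escalates pays for every model tried along the way, so the cascade saves money only when the cheaper models can handle a large share of the traffic.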

The LLM Challenge: Cost vs. AI Accuracy

The balance between cost and accuracy is a critical factor in decision-making, especially when it comes to the adoption of new LLMs. FrugalGPT, with its promise of maintaining high accuracy while significantly reducing costs, presents a very compelling proposition.

Recognizing the challenges of high operational expenses and complex Generative AI integration, we at Teneo.ai address these issues head-on. We have incorporated FrugalGPT's approach to Generative AI orchestration into a platform that can automatically choose the most beneficial deployment of any LLM in any instance. This can reduce operational expenses by up to 98% while enhancing efficiency and customization. Such a decrease in expenses opens the door for a wider range of companies to harness the power of AI without the burden of prohibitive costs.

This flexibility also ensures that companies can choose the AI solution that best fits their operational needs and budget, without being locked into a single provider or facing exorbitant integration costs.

Balancing Cost-Effectiveness with High AI Accuracy

While Teneo.ai significantly reduces OpEx, it equally prioritizes maintaining high accuracy in its AI solutions. Combining the power of LLMs with the Teneo Linguistic Modeling Language (TLML) increases accuracy while reducing costs. This balance ensures that businesses do not have to compromise on the quality of AI-driven interactions, even as they enjoy reduced costs. Teneo.ai's approach, which includes advanced technologies like the Teneo Accuracy Booster, guarantees that precision is not sacrificed for affordability, no matter which LLM you choose to use.

A New Era of AI Efficiency with Teneo.ai

In conclusion, Teneo.ai presents a groundbreaking solution to the challenges of high operational expenses in generative AI. By employing strategies from the FrugalGPT methodology, Teneo.ai not only significantly reduces costs but also maintains high accuracy, ensuring quality AI interactions. This innovative approach opens doors for a wider range of businesses to harness the power of AI with Teneo, democratizing advanced AI technologies and leveling the playing field in the digital era.

Explore the Future of AI with Teneo.ai

Ready to transform your business with cost-effective and accurate AI solutions? Discover how Teneo.ai can revolutionize your AI strategy.

Sign up for a free guided demo today and experience the future of AI-enhanced customer service with Teneo – where innovation meets efficiency and accuracy.