OpenAI has released two powerful new language models: o3 and o4-mini. These models are designed to think before they respond, reason through complex interactions, and combine multiple capabilities within a single task. But what does this mean for contact centers and enterprise conversational AI?

If you’re managing digital assistants or AI agents in customer support environments, these models unlock a level of intelligence and flexibility that was previously out of reach. Here are five compelling reasons to start evaluating o3 and o4-mini now, especially if you’re building on an Agentic AI platform like Teneo.

1. Real Multi-Turn Reasoning That Improves Customer Resolution

The o-series models, especially OpenAI o3, have been trained to handle longer, more complex conversations. They can evaluate past context, understand layered instructions, and follow through across multiple turns, making them well suited to AI agents that guide customers through detailed or multi-step requests.

Whether it’s troubleshooting, billing disputes, or onboarding journeys, o3 and o4-mini offer significantly stronger comprehension and accuracy. In OpenAI’s evaluations by external experts, o3 made 20 percent fewer major errors than o1 on difficult, real-world tasks. For a contact center, that can mean fewer fallbacks to human agents and more customers getting what they need faster.

2. Integrated Tool Use Means Smarter, Self-Sufficient Agents

One of the biggest advances with o3 and o4-mini is their ability to decide when and how to use tools, such as search or file analysis, without manual prompting. This unlocks a new level of autonomy.

Imagine a virtual agent that can:

- Retrieve up-to-date return policies directly from your website

- Understand customer-submitted screenshots or product photos

- Summarize internal documentation for an agent in real time

Instead of following rigid scripts or a fixed sequence of API calls defined in advance, OpenAI o3 and o4-mini reason about which tools to use to complete the task. This enables more dynamic, helpful, and human-like conversations.

3. Visual Understanding Expands the Scope of Support

Customers often send images instead of describing the problem in words. A photo of a receipt. A screenshot of an error message. A picture of a damaged product.

The o3 and o4-mini models are built not just to describe those inputs but to reason through them. That means your conversational AI can now support image-based queries with confidence, enabling:

- Faster warranty claim processing

- Automated equipment diagnostics

- Smarter ticket triaging based on visual evidence

For contact centers pursuing omnichannel support, which is available with Teneo and Genesys Cloud, this is a significant leap forward.

4. Smarter Scaling with o4-mini’s Efficiency

Not every conversation requires maximum horsepower. That’s where o4-mini shines. It delivers strong reasoning and visual understanding at a much lower cost than o3, making it ideal for high-volume, low-latency use cases like:

- Tier 1 support

- FAQ automation with approaches such as Teneo RAG

- Pre-qualification or routing assistants

Because o4-mini outperforms older models such as o3-mini in key areas like math, data reasoning, and instruction following, you can get better answers without increasing spend. And if a more complex case comes in? Teneo’s built-in LLM Orchestration makes it easy to escalate to a more powerful model like o3.
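To make the escalation idea concrete, here is a minimal Python sketch of rule-based model routing. It is not Teneo’s API: the `Request` fields, the intent names, and the rules themselves are hypothetical, chosen only for illustration. The model identifiers `o3` and `o4-mini` are OpenAI’s published API names.

```python
from dataclasses import dataclass

# Hypothetical summary of an incoming request; field names are illustrative only.
@dataclass
class Request:
    intent: str        # e.g. "faq", "account_lookup", "billing_dispute"
    has_image: bool    # the customer attached a photo or screenshot
    escalated: bool    # a cheaper model's answer was already rejected

# Intents assumed, for this sketch, to need multi-step reasoning.
COMPLEX_INTENTS = {"billing_dispute", "troubleshooting", "warranty_claim"}

def choose_model(req: Request) -> str:
    """Pick an OpenAI model name using simple, auditable business rules."""
    if req.escalated:
        return "o3"        # retry hard cases on the stronger model
    if req.has_image or req.intent in COMPLEX_INTENTS:
        return "o3"        # visual or multi-step queries
    return "o4-mini"       # default for high-volume Tier 1 traffic

print(choose_model(Request("account_lookup", has_image=False, escalated=False)))  # o4-mini
print(choose_model(Request("faq", has_image=True, escalated=False)))              # o3
```

Keeping the rules explicit makes each routing decision easy to audit; in practice the inputs would come from intent classification and conversation state rather than being set by hand.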

5. Seamless Integration with Teneo for Enterprise-Ready AI

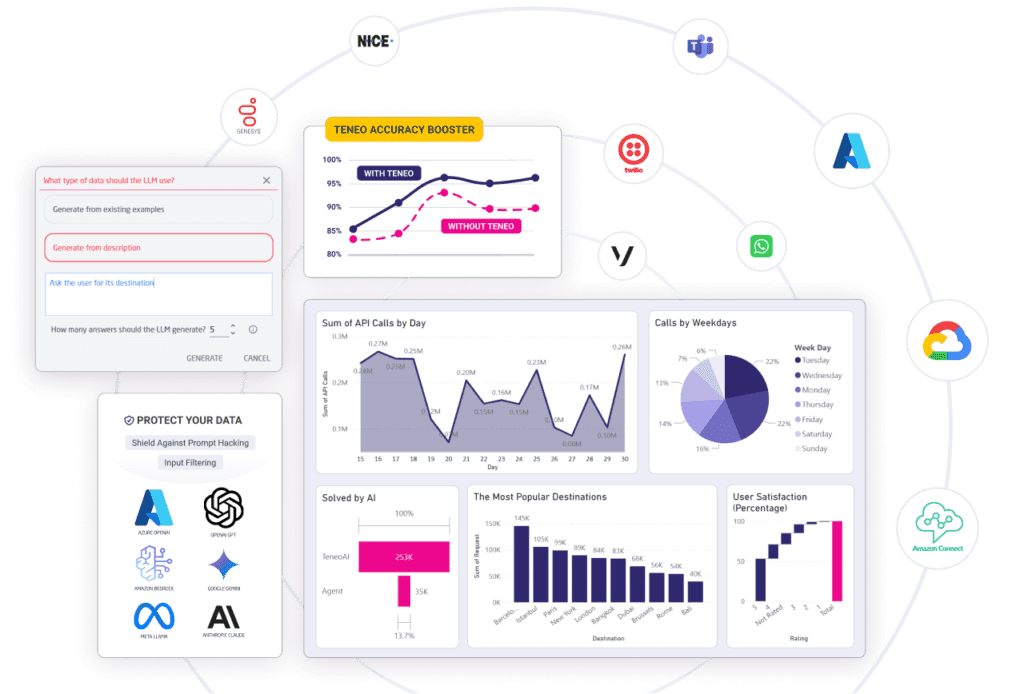

Teneo already gives you full control over how and when models are used. With the release of o3 and o4-mini, you can now route conversations intelligently based on complexity, channel, or business rules.

Want to handle visual queries with o3 but default to o4-mini for account lookups? No problem. Teneo lets you:

- Orchestrate multiple LLMs with AI Agents

- Apply Stanford University’s FrugalGPT approach, which has been shown to cut LLM costs by up to 98%

- Insert validation, security and compliance checks, and fallback logic

- Protect against prompt hacking and injection attempts

- Track outcomes and performance with detailed analytics
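The core idea behind FrugalGPT’s cost savings, the LLM cascade, can be sketched in a few lines: send a query to the cheapest model first, score its answer, and escalate only when the score falls below a threshold. The Python below is a hypothetical illustration, not Teneo’s implementation. The stub models and the `toy_score` function stand in for real API calls and for the learned scoring model described in the FrugalGPT paper, which also covers prompt adaptation and LLM approximation (not shown here).

```python
from typing import Callable, Sequence, Tuple

Model = Callable[[str], str]          # prompt -> answer (would wrap an API call)
Scorer = Callable[[str, str], float]  # (prompt, answer) -> reliability in [0, 1]

def cascade(prompt: str,
            tiers: Sequence[Tuple[str, Model, float]],
            score: Scorer) -> Tuple[str, str]:
    """Query models cheapest-first and return (model_name, answer).

    Each non-final tier's answer is accepted only if its score meets that
    tier's threshold; the final (most capable) tier is always accepted.
    """
    if not tiers:
        raise ValueError("need at least one tier")
    for name, model, threshold in tiers[:-1]:
        answer = model(prompt)
        if score(prompt, answer) >= threshold:
            return name, answer
    name, model, _ = tiers[-1]
    return name, model(prompt)

# Hypothetical stubs standing in for real model calls and a trained scorer.
def small_model(prompt: str) -> str:
    return "answer from o4-mini (stub)"

def large_model(prompt: str) -> str:
    return "answer from o3 (stub)"

def toy_score(prompt: str, answer: str) -> float:
    # Pretend the small model is reliable on return-policy questions only.
    return 0.9 if "return" in prompt.lower() else 0.3

tiers = [("o4-mini", small_model, 0.8), ("o3", large_model, 0.0)]
print(cascade("What is your return policy?", tiers, toy_score)[0])  # o4-mini
print(cascade("Why was I billed twice?", tiers, toy_score)[0])      # o3
```

When most routine queries stop at the cheaper tier, the average cost per query drops; how much quality is preserved depends on how well the scorer separates good answers from weak ones.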

You maintain control of the conversation strategy. The models simply give you more power to execute it.

Final Thoughts

OpenAI’s o3 and o4-mini models raise the bar for what’s possible in enterprise-grade conversational AI. Whether you’re building agents that support live reps or fully automated experiences, these models are equipped to handle today’s complexity and tomorrow’s expectations.

By integrating them into Teneo, you gain the flexibility to deploy the right intelligence for every use case, without compromising on control, speed, or scale. Contact us to learn more!

FAQ

What’s the difference between o3 and o4-mini?

o3 is more powerful and excels at multi-step, high-complexity tasks. o4-mini offers excellent reasoning at a lower cost, making it ideal for high-volume interactions.

Can these models understand and process images?

Yes. Both models can analyze images and use that input to drive decisions, which makes them well suited to receipts, product photos, or error screenshots.

Do I need to rebuild my flows to use these models in Teneo?

No. Teneo supports plug-and-play integration with LLMs like o3 and o4-mini, so you can enhance existing AI Agents without a full rebuild.

What are common use cases in contact centers?

Customer support, claims automation, technical troubleshooting, onboarding, and more: all of these benefit from deeper reasoning and contextual memory.

How do I control costs while using multiple models?

Teneo lets you route queries based on complexity. Use o4-mini for standard queries and switch to o3 only when advanced capabilities are needed.