The move toward adopting Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs) such as OpenAI’s GPT and Google’s Gemini marks a pivotal shift in customer service operations and conversational automation. These advancements are not fleeting trends; they are setting new standards for how businesses automate customer service interactions and analyze large datasets through virtual assistants.

The cost of integrating RAG and LLMs into your business strategy can be substantial, raising concerns about the financial feasibility of such projects. However, adopting advanced conversational automation and virtual assistant technologies doesn’t have to be prohibitively expensive. With the right strategy, it’s possible to keep costs in check without sacrificing quality.

This guide offers practical insights into managing the expenses of your LLM projects, ensuring that you can leverage the full potential of RAG and LLM technologies to enhance your operations without compromising quality or financial sustainability.

Virtual Assistants and LLMs

Before diving in, take a step back and assess what you actually need from an LLM-powered virtual assistant. Pinpoint its purpose: simplifying customer service, automating conversations or process flows, managing calls efficiently, or running a basic FAQ system. Not every goal demands the most advanced or expensive LLM. Often, a smaller model can meet your needs effectively at a lower cost.

Match the complexity of the model to the specific tasks you want to accomplish. Also consider the future scalability and flexibility of the virtual assistant: choose an AI orchestration platform that can adapt and grow with your business, so you are not locked into technology that cannot scale to meet future requirements.
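Matching model complexity to the task can start as a simple lookup from task type to model tier. The sketch below is a minimal illustration under stated assumptions: the tier names, per-token prices, and task categories are hypothetical placeholders, not real vendor pricing or any Teneo API.

```python
from dataclasses import dataclass

# Hypothetical model tiers; names and per-1K-token prices are placeholders,
# not real vendor pricing.
@dataclass(frozen=True)
class ModelTier:
    name: str
    cost_per_1k_tokens: float

SMALL = ModelTier("small-faq-model", 0.0005)
LARGE = ModelTier("large-reasoning-model", 0.03)

# Assumed mapping from task type to the cheapest tier judged good enough.
TASK_TO_TIER = {
    "faq": SMALL,
    "call_routing": SMALL,
    "process_flow": LARGE,
    "open_ended_support": LARGE,
}

def pick_model(task_type: str) -> ModelTier:
    """Return the configured tier for a task type.

    Unknown task types fall back to the large model so that quality is not
    silently degraded for use cases nobody has evaluated yet.
    """
    return TASK_TO_TIER.get(task_type, LARGE)

def estimated_cost(task_type: str, tokens: int) -> float:
    """Rough cost of one request of `tokens` tokens on the chosen tier."""
    tier = pick_model(task_type)
    return tier.cost_per_1k_tokens * tokens / 1000

print(pick_model("faq").name)
# prints small-faq-model
```

Defaulting unknown task types to the larger model trades some cost for safety; a stricter design might reject unknown task types instead, so that each new use case is reviewed before it goes live.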

Optimize Your Data to Avoid LLM Hallucinations

Hallucinations are the Achilles’ heel of LLMs: a hallucination occurs when a model generates plausible-sounding information that is false. Two recent examples show the consequences: a car dealership’s chatbot agreed to sell a Chevrolet for $1, and Air Canada’s chatbot gave a customer incorrect information about ticket refunds. Data is the lifeblood of LLMs, but more data doesn’t always mean better performance; quality and relevance matter more. Before building a RAG pipeline, invest time in cleaning and optimizing your data, because every webpage included in the knowledge base affects the assistant’s answers. Remove or update outdated information on your website. This can dramatically increase customer satisfaction, which in turn lowers costs and increases revenue.
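As a rough illustration of that cleanup step, the following sketch filters a crawled knowledge base before indexing. It assumes each page carries a last-updated date and treats anything older than a chosen cutoff as stale, which is only a proxy for outdated content; it also drops exact duplicates after whitespace and case normalization. The `Page` type, `clean_corpus` function, and example URLs are hypothetical, not part of any particular RAG framework.

```python
import hashlib
from dataclasses import dataclass
from datetime import date

@dataclass
class Page:
    url: str
    text: str
    last_updated: date

def normalize(text: str) -> str:
    # Collapse whitespace and case so trivially different copies hash the same.
    return " ".join(text.lower().split())

def clean_corpus(pages: list[Page], cutoff: date) -> list[Page]:
    """Drop empty pages, pages not updated since `cutoff`, and exact
    duplicates (after normalization), keeping the most recent copy."""
    # Newest first, so the first copy of each duplicate we see is the freshest.
    ordered = sorted(pages, key=lambda p: p.last_updated, reverse=True)
    seen: set[str] = set()
    kept: list[Page] = []
    for page in ordered:
        body = normalize(page.text)
        if not body or page.last_updated < cutoff:
            continue  # empty or presumed stale
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a newer page already kept
        seen.add(digest)
        kept.append(page)
    return kept

pages = [
    Page("https://example.com/refunds-2019", "Old refund policy.", date(2019, 1, 1)),
    Page("https://example.com/refunds", "Refunds are issued within 14 days.", date(2024, 3, 1)),
    Page("https://example.com/refunds-copy", "Refunds are  issued within 14 days.", date(2023, 6, 1)),
]
print([p.url for p in clean_corpus(pages, cutoff=date(2023, 1, 1))])
# prints ['https://example.com/refunds']
```

Exact-match deduplication and a date cutoff are deliberately blunt; near-duplicate detection and human review of flagged pages would catch outdated content that a recent timestamp hides.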

Conversational AI, LLMs, and Accuracy

Accuracy in Conversational AI is not just about responding correctly; it’s the foundation of trust, efficiency, and customer satisfaction. In an era where immediate and reliable information is expected, the precision of virtual assistants directly influences a user’s experience and perception of a brand. Accurate responses ensure users feel understood and valued, minimizing frustrations and maximizing the effectiveness of automated interactions.

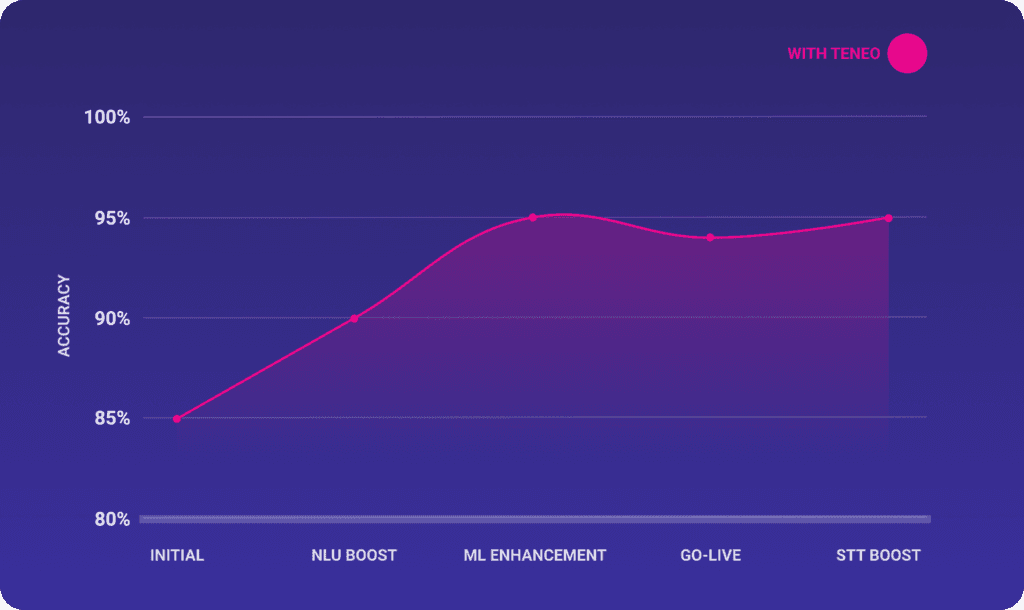

Teneo sets the benchmark with its Natural Language Understanding (NLU) engine, achieving an impressive end-to-end accuracy rate of 99% using the Teneo Accuracy Booster. This superior performance is powered by the Teneo Linguistic Modeling Language (TLML™), a proprietary technology that elevates intent detection and ensures robust responses, even in complex scenarios where traditional models might struggle.

With Teneo, accuracy isn’t just a feature; it’s a pathway to tangible benefits:

- Operational Efficiencies: Leveraging Teneo’s precision in NLU can dramatically reduce costs. For instance, improving NLU accuracy by 10% in a call center handling 1 million calls monthly can lead to savings of up to $500,000.

- Customer Satisfaction: Implementing solutions like Teneo has been shown to decrease call handling times by 30% and operational costs by 20%, directly boosting customer satisfaction and fostering loyalty.
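The call-center figure can be unpacked with simple arithmetic. Assuming "improving NLU accuracy by 10%" means 10 percentage points more of the 1 million monthly calls are handled correctly, and that the $500,000 is a monthly figure (the source states neither), the ceiling implies a saving of about $5 per additional correctly handled call:

```latex
\Delta N = 0.10 \times 1{,}000{,}000 = 100{,}000 \ \text{calls per month},
\qquad
s = \frac{\$500{,}000}{100{,}000 \ \text{calls}} = \$5 \ \text{per call}
```

Under other readings the implied figure shifts: if the 10% is relative (for example, accuracy rising from 80% to 88%, or 80,000 more correct calls), the per-call saving would be $6.25; if the $500,000 is annual, it would be roughly $0.42 per call.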

In short, Teneo not only meets the accuracy needs of today’s Conversational AI but sets a new standard, transforming accuracy into cost savings and enhanced customer experiences.

Teneo’s Conversational IVR can reduce call handling times by 30% and operational costs by 20%, showcasing the substantial impact of accuracy on the bottom line.

Building LLM Bots with Teneo

Teneo empowers businesses to harness the full potential of Large Language Models for RAG bots. It provides a comprehensive platform that simplifies the creation, deployment, and management of these assistants across a spectrum of use cases, from basic customer service FAQs to complex, interactive process flows. By prioritizing cost-effectiveness, Teneo lets businesses use the latest AI technology without prohibitive expenses, saving up to 98% of LLM costs with FrugalGPT.

Teneo enhances user experiences through intelligent interaction design, applies data optimization to minimize the risk of misleading information and hallucinations, and offers robust monitoring and analytics capabilities. These are further augmented by integration with Power BI, enabling organizations to generate detailed, customizable insights into user interactions, satisfaction metrics, and overall assistant performance. This holistic approach streamlines development and helps keep digital assistants efficient and aligned with the evolving needs of businesses and their customers, ensuring long-term relevance and value in a competitive digital landscape.
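FrugalGPT is a research approach (Chen, Zaharia, and Zou, 2023) for cutting LLM spend. One of its strategies is an LLM cascade: a query goes to cheaper models first and escalates to a more expensive model only when a scoring function judges the cheaper answer unreliable. The sketch below illustrates that cascade idea in minimal form. It is not Teneo’s implementation, which the post does not describe; the models, scorer, and thresholds are hypothetical toys, whereas in the paper the scorer is a separately trained model and the model order and thresholds are tuned on data.

```python
from typing import Callable, Sequence

# A model is any callable from prompt to answer (in practice, a wrapper around
# an LLM API client). The scorer estimates how reliable an answer is, in [0, 1].
Model = Callable[[str], str]
Scorer = Callable[[str, str], float]

def cascade(
    prompt: str,
    models: Sequence[tuple[str, Model, float]],
    scorer: Scorer,
) -> tuple[str, str]:
    """Query models in order from cheapest to most expensive.

    Each entry is (name, model, threshold). The first answer whose score meets
    its model's threshold is returned; the final model is the fallback and its
    answer is accepted without scoring.
    """
    if not models:
        raise ValueError("cascade needs at least one model")
    *cheaper, (last_name, last_model, _) = models
    for name, model, threshold in cheaper:
        answer = model(prompt)
        if scorer(prompt, answer) >= threshold:
            return name, answer  # cheap answer accepted; no escalation
    return last_name, last_model(prompt)

# Toy stand-ins (hypothetical): the small model only knows opening hours.
def small_model(prompt: str) -> str:
    return "Our hours are 9-5." if "hours" in prompt else "I don't know."

def large_model(prompt: str) -> str:
    return "Refunds are issued within 14 days."

def toy_scorer(prompt: str, answer: str) -> float:
    return 0.0 if "don't know" in answer else 0.9

tiers = [("small", small_model, 0.8), ("large", large_model, 0.0)]
print(cascade("What are your hours?", tiers, toy_scorer))
# prints ('small', 'Our hours are 9-5.')
print(cascade("How do refunds work?", tiers, toy_scorer))
# prints ('large', 'Refunds are issued within 14 days.')
```

The saving depends on how often the cheap model’s answer is accepted: every query it resolves avoids a call to the large model. Escalated queries pay for both calls and take longer, so the thresholds trade cost against answer quality and latency.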

Book a demo and get the benefits of LLMs with Teneo