In the rapidly evolving world of conversational AI, Large Language Models (LLMs) have emerged as potent tools, capable of performing a myriad of tasks in natural language processing. However, their true potential of LLM is unlocked through effective prompt engineering.

What is Prompt Engineering?

Prompt Engineering is the art and science of crafting instructions (or prompts) to guide LLMs in producing optimal outputs. This article dives into the nuances of prompt engineering, when working with LLMs, especially in the context of intelligent routing in a Contact Center using Teneo.

Why is Prompt Engineering with LLMs Crucial?

- Control Over Outputs: LLMs, though powerful, can sometimes produce unpredictable results. Prompt engineering ensures that the outputs align with the desired intent, especially in business scenarios where precision is paramount.

- Enhanced User Experience: A well-crafted prompt can significantly improve the user experience by ensuring that the LLM’s response is relevant, accurate, and contextually appropriate.

5 Key Considerations for Crafting Prompts

- Iterative Process: Crafting the perfect prompt often requires multiple iterations. It’s essential to experiment and refine based on feedback.

- Structure: A typical prompt should have an instruction, context, and a desired output. Additional parameters, like temperature, can be used to adjust the model’s creativity.

- Branding: For customer-facing outputs, align the responses with brand guidelines. For instance, instructing the model to use a specific tone or persona can ensure brand consistency.

- Specificity: LLMs perform best with clear and unambiguous instructions. Including keywords, fallback instructions, and context can enhance the quality of the output.

- Negative Prompts: Specifying what the LLM should avoid can be as important as instructing what it should do, especially to prevent inappropriate or offensive outputs.

Prompting with Teneo

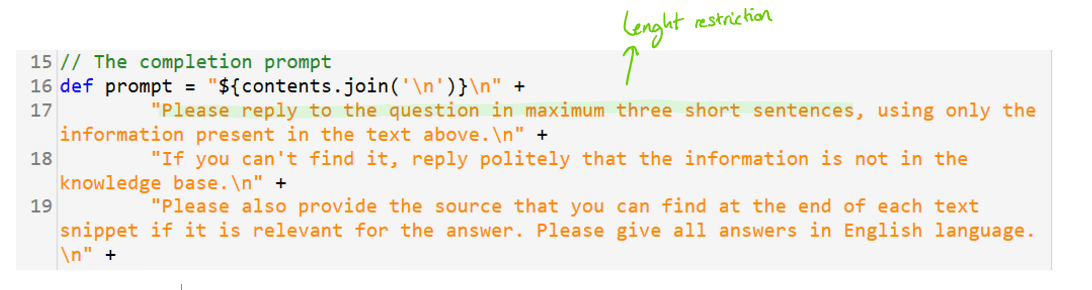

With Teneo Conversational IVR, prompts guide GPT models to condense dialogues and extensive user inputs. The dialogue summarization function encapsulates interactions between customers and the smart IVR, offering contact center representatives a richer context when the call is forwarded. The image beneath illustrates the summarization prompts employed in this solution, emphasizing that even the most concise prompts encompass the fundamental components mentioned earlier.

Prompt Displayed in Teneo for Condensing Conversations

Teneo also facilitates improved intent detection by condensing lengthy user inputs prior to their categorization by the NLU engine. The subsequent image presents the directive, which integrates a user persona and a distinct emphasis area.

One of the standout features of LLMs is their versatility in handling various tasks. Understandably, each task demands unique instructions, leading to distinct phrasing and wording structures.

Advanced LLM Prompting Techniques

- Chain-Of-Thought Prompting: Break down the main instruction into smaller tasks for better clarity.

- Few-Shot Prompting: Provide several input-output pairs in the prompt to enhance its performance.

- Cost Implications of Prompts: Most LLM providers charge based on tokens, which means the length of the prompt and the response affects the cost. It’s crucial to strike a balance between comprehensive prompts and cost efficiency.

Security Concerns: The Threat of Prompt Hacking

While LLMs offer immense benefits, they can also be exploited maliciously through techniques like prompt injection. It’s vital to be aware of such threats and implement countermeasures.

As LLMs become integral to the conversational AI ecosystem, prompt engineering is emerging as an essential skill. Whether it evolves into a specialized role or becomes a staple skill for AI developers remains to be seen. Until then, the journey of exploration and learning continues.