The integration of Large Language Models like GPT into Conversational AI solutions has opened up a world of possibilities. While the exploration of these benefits continues, it’s essential to address other critical aspects, such as latency. This article delves into the latency issue associated with GPT models provided by Microsoft on Azure via an API.

The Importance of Latency in GPT

In Conversational AI, every second counts, especially in solutions like Teneo, which aims to facilitate natural, fluid conversations between humans and bots over the phone. In such scenarios, even a slight delay can disrupt the user experience.

Understanding the Baseline

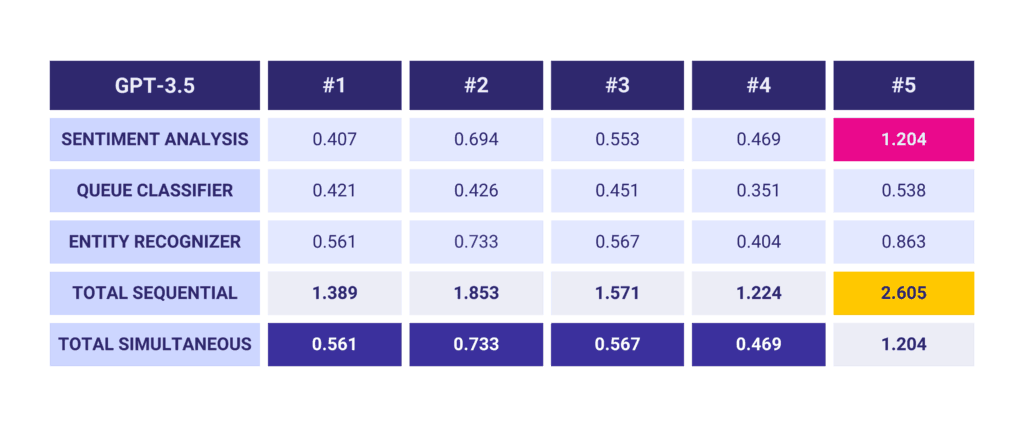

Typically, a call to the Azure OpenAI API takes between 0.2 and 0.8 seconds for interactions with a relatively small prompt (e.g. the task instructions plus the user input being analyzed, such as ‘My phone is broken’). However, there can be outliers that take more than a second, and in the worst case several seconds. This latency becomes problematic when multiple GPT calls are required within a single bot interaction, such as Sentiment Analysis, Queue Classification, and Entity Recognition.
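To find the baseline for your own setup, it helps to time a batch of calls and look at the median and the slowest run. The following is a minimal sketch only: `fake_gpt_call` is a hypothetical stand-in that sleeps instead of contacting any API, and the one-second outlier threshold is an assumption taken from the figures above.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latencies(call: Callable[[], object], runs: int = 20) -> List[float]:
    """Time `call` repeatedly and return the elapsed seconds of each run."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: List[float], outlier_threshold: float = 1.0) -> Dict[str, float]:
    """Report the median, the slowest run, and how many runs exceeded the threshold."""
    return {
        "median": statistics.median(latencies),
        "max": max(latencies),
        "outliers": sum(1 for t in latencies if t > outlier_threshold),
    }


def fake_gpt_call() -> str:
    # Hypothetical stand-in for a real API request; replace with your own client call.
    time.sleep(0.01)
    return "negative"


if __name__ == "__main__":
    print(summarize(measure_latencies(fake_gpt_call, runs=5)))
```

The median shows what a typical caller experiences, while the maximum and the outlier count show how bad the tail can get.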

Improving Latency in GPT by 60%

To tackle the challenges of accumulated latency in a sequential setup and of outliers, we have released an update to our GPT Connector that improves multithreading for GPT calls.

When GPT API calls run sequentially, their latencies add up, and your bot’s response times may be only just satisfactory, as seen in test cases #1 to #4. When the same calls are executed simultaneously, the total latency is reduced to the duration of the longest single call.
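The difference can be illustrated with a small simulation. This is a sketch under stated assumptions, not the GPT Connector’s actual implementation: `fake_gpt_task` is a hypothetical stand-in that sleeps instead of calling an API, and the latencies are invented and scaled down so the demo finishes quickly.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical per-task latencies in seconds (not measured values).
SIMULATED_LATENCY = {
    "sentiment": 0.04,
    "queue": 0.06,
    "entities": 0.08,
}


def fake_gpt_task(task: str) -> str:
    """Stand-in for one GPT request; sleeps instead of calling a real API."""
    time.sleep(SIMULATED_LATENCY[task])
    return f"{task}: done"


def run_sequentially() -> Dict[str, str]:
    # Each call waits for the previous one, so the latencies add up.
    return {task: fake_gpt_task(task) for task in SIMULATED_LATENCY}


def run_concurrently() -> Dict[str, str]:
    # All calls start together on separate threads; the total wait is
    # roughly the duration of the slowest call.
    tasks = list(SIMULATED_LATENCY)
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        results = list(pool.map(fake_gpt_task, tasks))
    return dict(zip(tasks, results))


def timed(fn: Callable[[], object]) -> float:
    """Return the wall-clock seconds taken by one call to `fn`."""
    start = time.perf_counter()
    fn()
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {timed(run_sequentially):.3f}s")
    print(f"concurrent: {timed(run_concurrently):.3f}s")
```

With these invented numbers, the sequential run takes roughly the sum of the three latencies, while the concurrent run takes roughly as long as the slowest task.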

To put it simply, executing our GPT calls concurrently decreases the total latency in our first example by 59.6%. Seen from the other direction, running the calls sequentially instead of concurrently can increase the latency by as much as 147.6%.
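The two percentages describe the same gap from opposite directions, which is why they differ so much. The sketch below shows the arithmetic, assuming both figures refer to the same test case. The totals are hypothetical values chosen to give a 59.6% reduction, not the measured ones.

```python
def reduction_pct(sequential: float, concurrent: float) -> float:
    """Percentage saved by going from sequential to concurrent execution."""
    return (1 - concurrent / sequential) * 100


def increase_pct(sequential: float, concurrent: float) -> float:
    """Percentage added by going from concurrent to sequential execution."""
    return (sequential / concurrent - 1) * 100


# Hypothetical totals in seconds, chosen so the reduction is 59.6%.
sequential_total = 1.000
concurrent_total = 0.404

print(round(reduction_pct(sequential_total, concurrent_total), 1))
print(round(increase_pct(sequential_total, concurrent_total), 1))
```

With these rounded inputs the second figure comes out at about 147.5%, which agrees with the quoted 147.6% to within the rounding of the underlying measurements.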

Learn how

- Multithreading

The recent update to our GPT Connector introduces multithreading for GPT calls. Running the calls simultaneously brings the total latency down to roughly that of the slowest single call, a reduction of 59.6% in our first example.

- Handling Outliers

For outliers that take several seconds, it’s crucial to control the overall latency of your bot’s response. Depending on the importance of the task for the current interaction with the bot, you might want to customize the timeout settings for each instance of the GPT Helper used in your project.

- Customizing Timeouts

The importance of a specific GPT call for the current bot interaction and the resulting user experience should dictate the timeout settings. For instance, if the Sentiment Analysis takes longer than one second, we might prefer to skip its result and keep the latency low.
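The idea of per-call timeouts can be sketched as follows. This is an illustration only and does not use the GPT Helper’s real settings: `run_with_timeouts`, `slow_sentiment`, and `fast_queue` are hypothetical names, the calls are simulated with sleeps, and the timeouts are scaled down so the demo runs quickly.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable, Dict, Optional, Tuple


def run_with_timeouts(
    calls: Dict[str, Tuple[Callable[[], str], float]],
) -> Dict[str, Optional[str]]:
    """Run all calls concurrently; a call that misses its timeout yields None.

    `calls` must not be empty.
    """
    pool = ThreadPoolExecutor(max_workers=len(calls))
    start = time.perf_counter()
    futures = {name: (pool.submit(fn), timeout) for name, (fn, timeout) in calls.items()}
    results: Dict[str, Optional[str]] = {}
    for name, (future, timeout) in futures.items():
        # The budget counts from the shared start, since all calls began together.
        remaining = max(0.0, timeout - (time.perf_counter() - start))
        try:
            results[name] = future.result(timeout=remaining)
        except FutureTimeout:
            results[name] = None  # skip this task rather than delay the reply
    # Do not block on stragglers; a late call finishes in the background.
    pool.shutdown(wait=False)
    return results


def slow_sentiment() -> str:
    time.sleep(0.3)  # simulated outlier
    return "negative"


def fast_queue() -> str:
    time.sleep(0.02)
    return "support"


if __name__ == "__main__":
    print(run_with_timeouts({
        "sentiment": (slow_sentiment, 0.1),  # nice to have: drop it if slow
        "queue": (fast_queue, 1.0),          # essential: allow more time
    }))
```

In this sketch the simulated sentiment call misses its budget and comes back as `None`, so the bot can answer without it, while the queue classification completes in time.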

Selecting the Right Region and GPT Model

Choosing an Azure region close to where your project is deployed helps avoid additional network latency. Selecting the GPT model that best fits your requirements is just as important. For instance, GPT-3.5 tends to have lower latencies than GPT-4 and is also much cheaper.

While the use of GPT models adds many new possibilities to Teneo projects, it’s vital to understand the pros and cons to truly add value to your Conversational AI solutions. By addressing latency issues, we can ensure a seamless and efficient user experience.
