Large Language Models (LLMs) have revolutionized the field of artificial intelligence (AI), providing unprecedented capabilities in natural language processing, understanding, and generation. However, effectively orchestrating LLMs to achieve desired outcomes requires careful planning and execution. In this article, we’ll explore common pitfalls in LLM orchestration and provide practical solutions to overcome them.

Understanding LLM Orchestration

LLM orchestration involves managing and coordinating multiple large language models so that they work together seamlessly, for example by combining Meta Llama and Google Gemini with OpenAI GPT-4o. This approach is essential for tasks that require complex interactions, such as multi-turn conversations, context-aware responses, and the integration of domain-specific knowledge. The goal is to enhance the efficiency, accuracy, and overall performance of AI applications.

Common Pitfalls in LLM Orchestration

1. Lack of Context Management

Pitfall: One of the biggest challenges in LLM orchestration is maintaining context across multiple interactions. Without proper context management, the models can produce disjointed and irrelevant responses.

Solution: Implement robust context management strategies. Use techniques such as memory modules or context windows to keep track of previous interactions. Teneo, for example, excels in context management by preserving conversation context across sessions, which keeps responses coherent and relevant.
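
To make the idea of a memory module concrete, here is a minimal sketch of a rolling conversation memory. It is an illustration only and not how Teneo or any specific platform implements context management: the `ConversationMemory` class, its `max_chars` budget, and the prompt layout are all assumptions made for this example, and a real system would normally count tokens rather than characters.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Tuple


@dataclass
class ConversationMemory:
    """Rolling memory that keeps the newest turns within a size budget."""

    max_chars: int = 2000  # hypothetical budget; real systems usually count tokens
    turns: Deque[Tuple[str, str]] = field(default_factory=deque)

    def add(self, role: str, text: str) -> None:
        """Store a turn, then evict the oldest turns if the budget is exceeded."""
        self.turns.append((role, text))
        # Always keep at least the newest turn, even if it alone is over budget.
        while len(self.turns) > 1 and self._size() > self.max_chars:
            self.turns.popleft()

    def _size(self) -> int:
        return sum(len(text) for _, text in self.turns)

    def build_prompt(self, new_message: str) -> str:
        """Prepend the remembered turns to the new user message."""
        lines = [f"{role}: {text}" for role, text in self.turns]
        lines.append(f"user: {new_message}")
        return "\n".join(lines)


memory = ConversationMemory(max_chars=200)
memory.add("user", "My order number is 1234.")
memory.add("assistant", "Thanks, I found it.")
prompt = memory.build_prompt("When will it arrive?")
```

Because the same `prompt` string can be sent to whichever model handles the next turn, the memory lives in the orchestration layer rather than inside any single LLM.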

2. High Computational Costs

Pitfall: Running multiple LLMs simultaneously can be computationally expensive, leading to increased costs and latency issues.

Solution: Optimize the use of LLMs by routing each task to a model that is specifically trained for it. Tools like Teneo can help reduce costs by up to 98% by orchestrating LLMs efficiently: following Stanford University’s FrugalGPT approach, they leverage the strengths of each model while minimizing redundant computations. Additionally, consider using smaller, task-specific models for narrow tasks alongside larger models for more general ones.

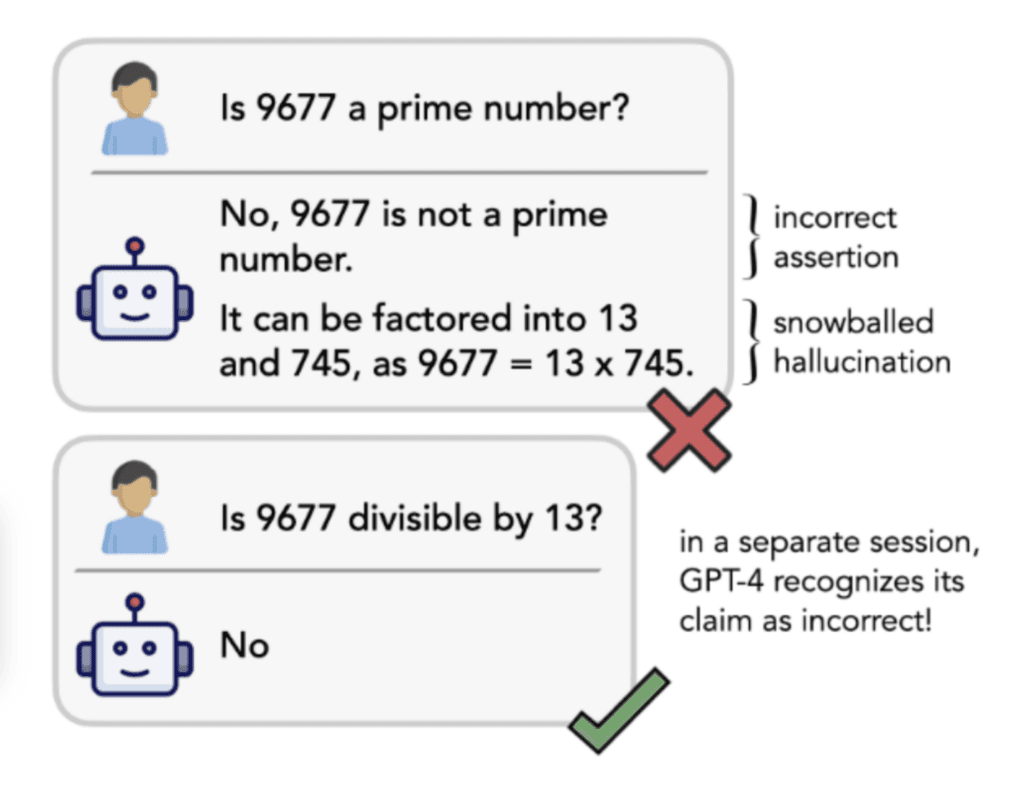
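
One idea described in the FrugalGPT work is the LLM cascade: query cheaper models first and escalate to a more expensive one only when the cheap answer does not look reliable. The sketch below illustrates that idea under simplifying assumptions. The `Model` class, the cost figures, the stub generators, and the keyword-based `scorer` are all hypothetical; FrugalGPT itself uses a learned scoring function, and this is not Teneo's implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Model:
    name: str
    cost_per_call: float            # illustrative cost units, not real prices
    generate: Callable[[str], str]  # stand-in for a real API call


def cascade(
    prompt: str,
    models: List[Model],
    scorer: Callable[[str, str], float],
    threshold: float = 0.8,
) -> Tuple[str, str, float]:
    """Try models from cheapest to most expensive.

    Stops at the first answer whose score reaches the threshold and returns
    (model name, answer, total cost spent). If no answer is accepted, the
    most expensive model's answer is returned.
    """
    spent = 0.0
    used, answer = "", ""
    for model in sorted(models, key=lambda m: m.cost_per_call):
        answer = model.generate(prompt)
        spent += model.cost_per_call
        used = model.name
        if scorer(prompt, answer) >= threshold:
            break
    return used, answer, spent


# Stub models and scorer, purely for demonstration.
small = Model("small", 1.0, lambda p: "Paris" if "capital of France" in p else "I am not sure")
large = Model("large", 20.0, lambda p: f"detailed answer to: {p}")


def scorer(prompt: str, answer: str) -> float:
    return 0.0 if "not sure" in answer else 1.0


easy = cascade("What is the capital of France?", [large, small], scorer)
hard = cascade("Explain quantum error correction", [large, small], scorer)
```

In this toy run the easy question is answered by the small model alone, while the hard one pays for both calls. That trade-off is the point of a cascade: it saves money only when most traffic can be answered by the cheaper models.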

3. Hallucinations

Pitfall: LLMs are prone to generating hallucinations—plausible-sounding but incorrect or nonsensical outputs.

Solution: To mitigate hallucinations, implement rigorous validation mechanisms. Cross-check model outputs against reliable data sources, and use ensemble methods to combine outputs from multiple models. Teneo offers features that can help mitigate hallucinations by integrating rule-based systems and human-in-the-loop approaches, supporting accuracy of 99% and greater reliability. For more information, see “5 biggest challenges with LLMs and how to solve them”.
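
As a rough illustration of combining an ensemble with a human-in-the-loop fallback, the sketch below takes answers from several models, accepts the majority answer only when enough of them agree, and otherwise returns `None` so the case can be escalated to a reviewer. The function name, the normalization step, and the `min_agreement` default are assumptions for this example; exact string matching only suits short factual answers.

```python
from collections import Counter
from typing import List, Optional, Tuple


def majority_answer(
    answers: List[str], min_agreement: float = 0.6
) -> Tuple[Optional[str], float]:
    """Return (accepted answer or None, agreement ratio).

    Answers are compared after trimming whitespace and lowercasing.
    None signals that the models disagree too much and a human
    (or a rule-based check) should review the case.
    """
    normalized = [a.strip().lower() for a in answers if a.strip()]
    if not normalized:
        return None, 0.0
    top, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return (top if agreement >= min_agreement else None), agreement


accepted, ratio = majority_answer(["Paris", "paris ", "Lyon"])
escalated, low_ratio = majority_answer(["1889", "1887", "1890"])
```

Agreement between models does not prove an answer is correct, so a check like this works best alongside the cross-checks against reliable data sources mentioned above.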

4. Integration Challenges

Pitfall: Integrating LLMs into existing systems and workflows can be complex and time-consuming.

Solution: Utilize orchestration platforms that are designed for easy integration. Teneo, for instance, can be seamlessly integrated with various LLMs and existing IT infrastructures, facilitating smooth deployment and operation. Comprehensive documentation and support can also streamline the integration process.
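
Whatever platform is used, integration tends to be easier when the rest of the system talks to one small interface instead of to each vendor's SDK directly. The following sketch shows that pattern with a hypothetical `TextModel` protocol and `Orchestrator` registry; the names and the `EchoModel` stand-in are invented for illustration and do not correspond to any real vendor API.

```python
from typing import Dict, Protocol


class TextModel(Protocol):
    """Minimal interface every model adapter is assumed to implement."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would wrap a vendor SDK call here."""

    def __init__(self, prefix: str) -> None:
        self.prefix = prefix

    def complete(self, prompt: str) -> str:
        return f"{self.prefix}{prompt}"


class Orchestrator:
    """Routes each task type to whichever adapter is registered for it."""

    def __init__(self) -> None:
        self._models: Dict[str, TextModel] = {}

    def register(self, task: str, model: TextModel) -> None:
        self._models[task] = model

    def run(self, task: str, prompt: str) -> str:
        if task not in self._models:
            raise KeyError(f"no model registered for task '{task}'")
        return self._models[task].complete(prompt)


orchestrator = Orchestrator()
orchestrator.register("summarize", EchoModel("[summary] "))
orchestrator.register("translate", EchoModel("[translation] "))
result = orchestrator.run("summarize", "Quarterly report text")
```

Swapping one model for another then means registering a different adapter, with no changes to the calling code.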

5. Scalability Issues

Pitfall: As the demand for AI-driven solutions grows, scalability becomes a significant challenge.

Solution: Design your LLM orchestration framework with scalability in mind. Use cloud-based solutions to allocate resources dynamically based on demand. Platforms like Teneo support scalable architectures, allowing your AI applications to grow with your needs without compromising performance. Teneo recently announced that a Fortune 500 company had scaled from 3 countries to 80 countries in just a week.
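
Cloud autoscaling handles capacity on the infrastructure side, but the application still needs to avoid flooding a model endpoint when traffic spikes. The sketch below shows one application-level piece of that: capping the number of in-flight requests with `asyncio.Semaphore`. The `run_bounded` helper, the `fake_model` coroutine, and the limit of 2 are assumptions for illustration; a real client would call an actual model API and pick a limit based on its rate limits.

```python
import asyncio
from typing import Awaitable, Callable, List


async def run_bounded(
    prompts: List[str],
    call_model: Callable[[str], Awaitable[str]],
    max_concurrent: int = 4,
) -> List[str]:
    """Run all prompts, allowing at most max_concurrent calls at a time.

    Results are returned in the same order as the prompts.
    """
    semaphore = asyncio.Semaphore(max_concurrent)

    async def worker(prompt: str) -> str:
        async with semaphore:
            return await call_model(prompt)

    return list(await asyncio.gather(*(worker(p) for p in prompts)))


async def fake_model(prompt: str) -> str:
    """Stand-in for a network call to a model endpoint."""
    await asyncio.sleep(0.01)
    return prompt.upper()


results = asyncio.run(run_bounded(["a", "b", "c"], fake_model, max_concurrent=2))
```

Raising `max_concurrent` as capacity grows lets the same code serve more traffic without changing how callers use it.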

The Role of Teneo in LLM Orchestration

Teneo is a powerful platform that can be used with any LLM to enhance efficiency, reduce costs, and improve overall performance. It provides advanced features for context management, integration, and scalability, making it an ideal choice for organizations looking to succeed with LLM orchestration. By leveraging Teneo’s capabilities, you can streamline the orchestration process, mitigate common pitfalls, and achieve better outcomes.

Ready to reach the next level?

Explore how Teneo can help you succeed with LLM orchestration. Contact our team to schedule a demo and see how our solutions can benefit your organization.

FAQ

1. What is LLM orchestration?

LLM orchestration involves managing and coordinating multiple language models to work together seamlessly, enhancing the performance and capabilities of AI applications.

2. How does Teneo help with context management?

Teneo preserves conversation context across sessions, ensuring coherent and relevant responses by using advanced context management strategies.

3. Can Teneo reduce the computational costs of running LLMs?

Yes, Teneo optimizes the use of LLMs by efficiently orchestrating them, leveraging the strengths of each model, and minimizing redundant computations.

4. How does Teneo mitigate hallucinations in LLMs?

Teneo integrates rule-based systems and human-in-the-loop approaches, ensuring higher accuracy and reliability, thus mitigating hallucinations.

5. Is Teneo scalable?

Yes, Teneo supports scalable architectures, allowing your AI applications to grow with your needs without compromising performance.