Interactive Voice Response (IVR) systems have long been a staple of customer service operations, serving as the first point of contact for many customers. As technology evolves and customer expectations rise, traditional IVR systems struggle to deliver more than rigid, menu-driven interactions. Part of the answer to this challenge lies in using Large Language Models (LLMs) to strengthen IVR systems.

LLMs, such as ChatGPT and Bard, are artificial intelligence (AI) models trained on vast amounts of data to understand, generate and transform human language.

When integrated with IVR systems, large language models have the potential to significantly improve both customer experience and operational efficiency, making LLMs a promising prospect for the future of customer service operations.

Leveraging LLMs: The Next Step in Customer Service

One of the key benefits of integrating an LLM with IVR systems is the ability to use GPT in agent-assist scenarios.

This would particularly help newer agents ramp up quickly, providing them with concise, summarized answers they might otherwise struggle to provide.

This feature not only boosts agent productivity and reduces the potential for escalation, but it can also minimize call duration and significantly enhance the overall customer experience.

Furthermore, LLMs are employed to expand coverage and enhance precision in intent recognition within IVR systems.

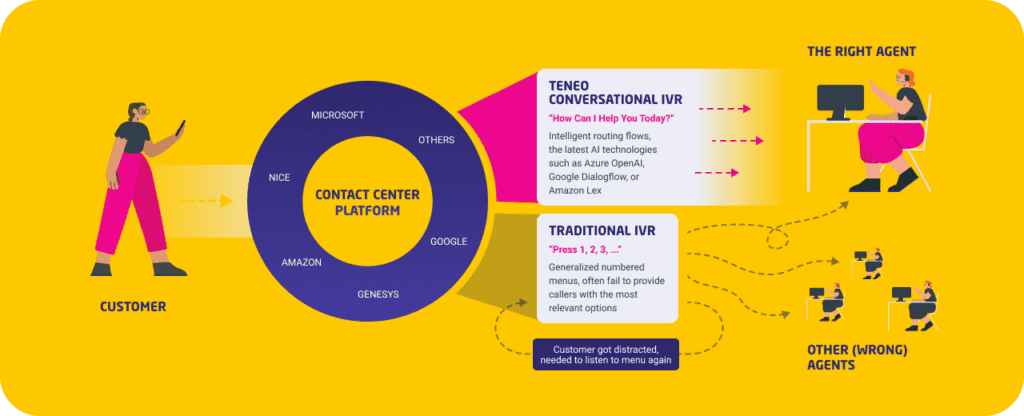

Systems such as Teneo Conversational IVR integrate with LLMs, incorporating intent and entity recognition, sentiment analysis, etc., to further refine the understanding of customers’ needs and ensure accurate routing to the appropriate agent.

This reduces the time required to deploy new functionalities, update existing ones, and respond to new scenarios, enabling organizations to quickly adapt to changing customer needs.

Transforming Customer Interactions with LLMs

The integration of LLMs in IVR systems is not only about operational efficiency; it is also designed to significantly improve the customer experience. LLMs can generate dynamic responses, making interactions more engaging and personalized.

Additionally, they have the capability to incorporate company-specific information into generated answers, adding a touch of brand flavor to customer interactions.

Systems like Teneo Conversational IVR can combine LLM analysis with their own internal mechanisms, capturing subtle conversational nuances and allowing the IVR system to adapt and clarify when necessary.

This ensures accurate transcription and minimizes misunderstandings.

Another exciting feature of LLMs is integrated machine translation. With their advanced language understanding capabilities, LLMs can provide real-time translations, facilitating seamless communication between customers, automated solutions like Teneo Conversational IVR, and agents who speak different languages.

The Challenges of LLMs for Contact Centers and IVRs

Despite the numerous benefits of LLMs, like enhanced customer experience, operational efficiency, dynamic responses, and language translation, they also pose challenges.

A significant challenge is managing the subtleties and nuances of language. This includes dealing with ambiguous or unclear user queries.

LLMs often find it hard to accurately interpret short phrases and keywords. They also struggle to distinguish between similar intents with subtle differences in wording.

These issues can lead to misunderstandings or inaccuracies in the responses from the system’s virtual assistant or chatbot. Ultimately, this can cause dissatisfaction or frustration for the customer.

How are Challenges Solved with TLML?

The Teneo Linguistic Modeling Language (TLML) is designed to address some of these challenges. It operates as a deterministic language understanding system that identifies and interprets word patterns in a caller’s speech.

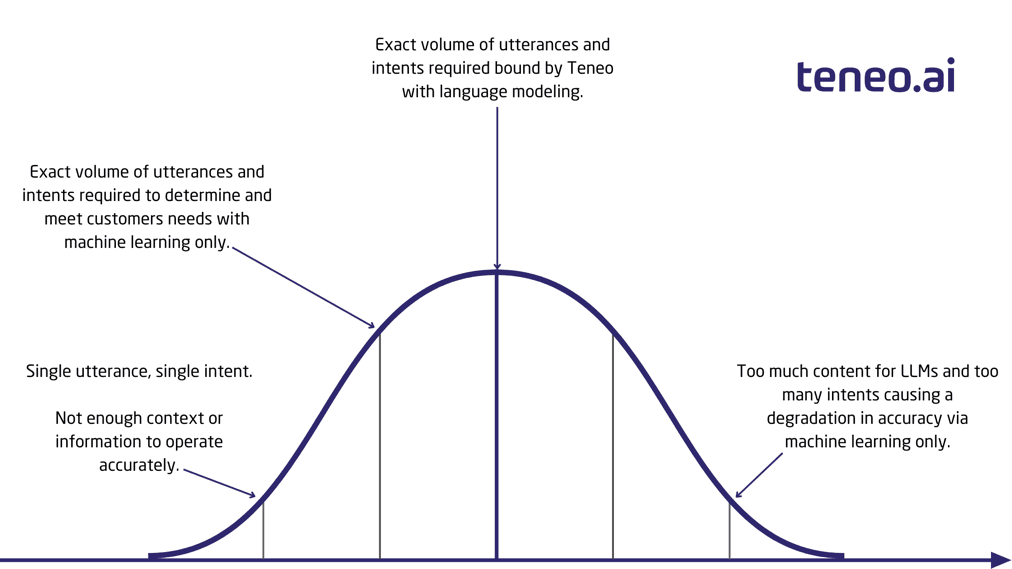

TLML works by providing an additional layer on top of traditional machine-learned models and LLMs, facilitating precise identification of user intents where machine learning alone might have difficulties.

It can differentiate between similar intents with minor differences in wording and effectively interpret short phrases or keywords.
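The layering described above can be pictured with a small sketch. This is not TLML syntax or Teneo's implementation: the rules are ordinary regular expressions, and the names `RULES`, `match_rule`, `resolve_intent`, and `stub_classifier` are hypothetical. It only illustrates the idea of a deterministic layer that decides first and defers to a machine-learned or LLM-based classifier when no rule applies.

```python
import re
from typing import Callable, Optional

# Hypothetical deterministic rules: (compiled pattern, intent name).
# These are plain regular expressions used for illustration, not TLML.
RULES = [
    (re.compile(r"\b(cancel|close)\b.*\b(account|subscription)\b", re.I), "cancel_account"),
    (re.compile(r"\b(cancel|stop)\b.*\b(order|delivery)\b", re.I), "cancel_order"),
    # A one-word utterance that a statistical model may find hard to place.
    (re.compile(r"^\s*(bill|billing|invoice)\s*$", re.I), "billing"),
]


def match_rule(utterance: str) -> Optional[str]:
    """Return the intent of the first rule that matches, or None."""
    for pattern, intent in RULES:
        if pattern.search(utterance):
            return intent
    return None


def resolve_intent(utterance: str, ml_classifier: Callable[[str], str]) -> str:
    """Prefer a deterministic rule match; otherwise defer to the classifier."""
    return match_rule(utterance) or ml_classifier(utterance)


def stub_classifier(utterance: str) -> str:
    """Stand-in for a machine-learned or LLM-based intent classifier."""
    return "general_enquiry"
```

In this sketch, "cancel my account" and "cancel my order" resolve to different intents even though they differ by a single word, and the keyword "billing" is caught by a rule instead of being left to the classifier.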

Moreover, TLML enables information extraction directly from a user’s response. This capability helps in better understanding the user’s needs, personalizing responses, and improving the accuracy and overall efficacy of the system.
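As a rough illustration of extracting information from a caller's response, the sketch below pulls two values out of a transcribed utterance. The patterns, slot names, and the `extract_slots` function are assumptions made for this example; they do not reflect how TLML itself expresses extraction.

```python
import re
from typing import Dict

# Hypothetical patterns for two slot types; a real deployment would define its own.
ORDER_NUMBER = re.compile(r"\border\s*(?:number\s*)?(\d{4,10})\b", re.I)
DATE = re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b")


def extract_slots(utterance: str) -> Dict[str, str]:
    """Collect any order number and date mentioned in the utterance."""
    slots: Dict[str, str] = {}
    order_match = ORDER_NUMBER.search(utterance)
    if order_match:
        slots["order_number"] = order_match.group(1)
    date_match = DATE.search(utterance)
    if date_match:
        slots["date"] = date_match.group(0)
    return slots
```

Values captured this way could then be passed along with the recognized intent, so the caller does not have to repeat them to an agent.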

With TLML, you can harness the flexibility and power provided by large language models, saving time in building and maintaining content. This example shows how this is achieved:

Teneo and GPT

Why does a Contact Center need TLML when working with LLMs?

Contact centers require TLML when working with an LLM. This is because TLML enhances the LLM’s capabilities. It coordinates multiple methods to achieve the highest precision and improve the overall performance of IVR systems.

With TLML integration, the system’s comprehension and interpretation of language become significantly more precise.

Teneo’s TLML boosts overall accuracy. It incorporates LLM capabilities to improve intent identification. This makes it easier to understand and respond to user needs. It also ensures the system evolves smoothly as more intents and use cases are added.

As a deterministic language understanding system that identifies and interprets word patterns in a caller’s speech, TLML significantly improves the accuracy of both the LLM and the Natural Language Understanding (NLU) layer.

The language leverages the benefits and capabilities provided by large language models. This saves time in building and maintaining content.

As a result, contact centers can focus more on delivering exceptional service. They can spend less time on the technical aspects of system maintenance and updates.

Accuracy Booster

For Contact Centers, TLML is available through Teneo Conversational IVR

Teneo: Revolutionizing Customer Service

Teneo Conversational IVR is a plug-in built on Teneo. It revolutionizes customer service by replacing traditional keypad-based menus with natural language-based routing and automation.

It fully integrates with major Call Center platforms like Genesys, AWS, and Google. Teneo serves as a Conversational AI frontend. This allows callers to engage with a virtual assistant or agent that accurately routes them to the relevant queue.

Customers can also use Teneo alongside other AI services in their contact center, including voice services such as Speech-to-Text (STT) and Text-to-Speech (TTS). Teneo can even provide support for other conversational AI platforms.

How Teneo Conversational IVR Works

Teneo’s out-of-the-box Conversational IVR solution offers:

- Pre-built flows for direct routing and conversational clarification when users don’t provide sufficient information

- FAQ and common situation handling such as empty inputs, nonsense, user-requested repetition, and very long inputs

- Callback functionality, sentiment analysis, and summarization before handover to the agent

- Measurable impact on key contact center KPIs: higher NPS and automation, with fewer misroutes, redials, and transfers between agents

- Expansion of Genesys Cloud CX with ChatGPT features through the Teneo GPT connector

- ISO and SOC 2 compliance with strong privacy protection, addressing concerns missing from GPT-based chatbot platforms
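The common-situation handling in the list above (empty inputs, user-requested repetition, very long inputs) can be thought of as a pre-check that runs before intent recognition. The following is a minimal sketch under that assumption, not Teneo's pre-built flows: `InputKind`, `triage`, the `MAX_WORDS` threshold, and the repetition phrases are all hypothetical, and nonsense detection is left out for brevity.

```python
import re
from enum import Enum


class InputKind(Enum):
    EMPTY = "empty"
    REPEAT_REQUEST = "repeat_request"
    TOO_LONG = "too_long"
    NORMAL = "normal"


MAX_WORDS = 40  # hypothetical cut-off for a "very long" input
REPEAT = re.compile(r"\b(repeat|say that again|come again|pardon)\b", re.I)


def triage(utterance: str) -> InputKind:
    """Classify a transcribed utterance before it reaches intent recognition."""
    text = utterance.strip()
    if not text:
        return InputKind.EMPTY
    if REPEAT.search(text):
        return InputKind.REPEAT_REQUEST
    if len(text.split()) > MAX_WORDS:
        return InputKind.TOO_LONG
    return InputKind.NORMAL
```

Each non-normal result would map to its own flow, for example re-prompting on an empty input or replaying the last prompt on a repetition request.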

AI-powered advancements like these show how customer service operations can be redefined. The combination of TLML and LLMs in Teneo provides an effective and scalable way to enhance IVR systems and create a seamless, personalized experience for customers.

Implementing AI-powered IVR systems is not just a technological upgrade; it’s a strategic move towards revolutionizing customer service and gaining a competitive advantage.

Revolutionizing Customer Experience in the Healthcare Industry with AI-Powered Contact Centers

If you are interested in exploring how AI can transform your customer service operations, we invite you to read our detailed case study.

This case study provides an in-depth view of how a leading global healthcare technology company successfully harnessed the power of AI through the Teneo Conversational IVR system, resulting in improved operational efficiency, enhanced customer satisfaction, and substantial cost savings.